Since the double-slit interference pattern can be moved by altering the light delay at one of the slits, you may have realized that if we can control the delay/phase of the radio signal before it leaves the antenna, we might be able to direct the beam where we want it. Indeed, this is the basic principle of beamforming. By applying a delay/phase to the signal of each antenna, we can shape the beam as desired. Let’s start with a simple case: applying phases of 0 and π to the two rod antennas from the previous article. Run the following command:

python3 -c "from beamforminglib import *; ant_array_beam_pattern(freq_hz=2450e6, array_style='linear', num_ant=2, ant_spacing_wavelength=0.5, beamforming_vec_rad=np.array([0, np.pi]))"

The figure on the right is obtained by the above command, with the left showing the original beam.

Similar to the double-slit interference case, in this case, there is no signal at 0 degrees (to the right).
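The cancellation at 0 degrees can be checked by hand with a few lines of Python. This is a minimal sketch, not the implementation inside beamforminglib: the function name `two_antenna_strength` and the angle convention (the angle is measured from the direction perpendicular to the line joining the two antennas) are assumptions chosen to match the figures described in this article.

```python
import cmath
import math

def two_antenna_strength(theta_deg, phase_ant1_rad, spacing_wavelength=0.5):
    """Combined field strength of two omnidirectional antennas.

    Assumption: theta is measured from the direction perpendicular to the
    line joining the antennas, so the path difference is spacing*sin(theta).
    """
    path_phase = 2 * math.pi * spacing_wavelength * math.sin(math.radians(theta_deg))
    # Antenna 0 is the reference (phase 0); antenna 1 adds the path phase
    # and the applied beamforming phase.
    return abs(1 + cmath.exp(1j * (path_phase + phase_ant1_rad)))

for phase in (0.0, math.pi):
    at_0 = two_antenna_strength(0, phase)
    at_90 = two_antenna_strength(90, phase)
    print(f"applied phase {phase:.2f} rad: 0 deg -> {at_0:.2f}, 90 deg -> {at_90:.2f}")
```

With an applied phase of 0 the strength is 2 at 0 degrees and vanishes at 90 degrees; with an applied phase of π the two directions swap roles.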

Since the beam can be directed towards 0 and 90 degrees by applying phases (0 and π) to the two antennas, wouldn’t it be possible to do beam scanning by using some intermediate phase values? The answer is YES. The following command demonstrates beam scanning by continuously changing the phase of the 2nd antenna from -π to π with step size π/8 while keeping the phase of the 1st antenna 0:

python3 test_linear2_bf_scan.py

If you open test_linear2_bf_scan.py, you will find that it calls the same Python function ant_array_beam_pattern repeatedly and feeds a series of phases (-π to π with step size π/8) to the 2nd antenna via the 2nd element of the argument beamforming_vec_rad. The phases are listed in the following table:

| time | phase ant0 | phase ant1 |

|---|---|---|

| 0 | 0 | -π |

| 1 | 0 | -7π/8 |

| 2 | 0 | -6π/8 |

| 3 | 0 | -5π/8 |

| 4 | 0 | -4π/8 |

| 5 | 0 | -3π/8 |

| 6 | 0 | -2π/8 |

| 7 | 0 | -1π/8 |

| 8 | 0 | 0 |

| 9 | 0 | 1π/8 |

| 10 | 0 | 2π/8 |

| 11 | 0 | 3π/8 |

| 12 | 0 | 4π/8 |

| 13 | 0 | 5π/8 |

| 14 | 0 | 6π/8 |

| 15 | 0 | 7π/8 |
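To see how each row of the table moves the beam, the sketch below generates the same 16 phase values and searches for the strongest direction at each step. It is an illustration only and does not reproduce test_linear2_bf_scan.py; `peak_direction_deg` is a hypothetical helper, the search is limited to -90..90 degrees in 1-degree steps, and the sign of the resulting angle depends on the assumed angle convention (angle measured from the direction perpendicular to the antenna line).

```python
import cmath
import math

def peak_direction_deg(phase_ant1_rad, spacing_wavelength=0.5):
    """Grid-search the angle (in -90..90 degrees) with the strongest signal."""
    best_angle, best_strength = None, -1.0
    for theta_deg in range(-90, 91):
        path_phase = 2 * math.pi * spacing_wavelength * math.sin(math.radians(theta_deg))
        strength = abs(1 + cmath.exp(1j * (path_phase + phase_ant1_rad)))
        if strength > best_strength:
            best_angle, best_strength = theta_deg, strength
    return best_angle

# The 16 scan steps from the table: -pi, -7pi/8, ..., 7pi/8
scan_phases = [k * math.pi / 8 for k in range(-8, 8)]
for step, phase in enumerate(scan_phases):
    print(step, f"{phase:+.3f} rad", peak_direction_deg(phase), "deg")
```

The peak sits where the applied phase cancels the path phase, that is where sin(theta) = -phase/π for half-wavelength spacing, so a phase of π/2 puts the peak 30 degrees off the 0-degree direction.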

To generate a narrower beam and scan it, the number of antennas in the array can be extended from 2 to 8. The antenna spacing is still half a wavelength, and all antennas are placed along one line. This kind of array topology is called a ULA (Uniform Linear Array). Regarding the phasing scheme: the 1st antenna’s phase is kept at 0, and the phase grows by a fixed step from one antenna to the next. For a step of π/8, for example, the 2nd to 8th antennas get π/8, 2π/8, 3π/8, …, 7π/8. You can check test_linear8_bf_scan.py to see the exact beamforming phase vector with 8 elements (argument beamforming_vec_rad). The corresponding command and scanning figure are:

python3 test_linear8_bf_scan.py

The corresponding phases per antenna are:

| time | ant0 | ant1 | ant2 | ant3 | ant4 | ant5 | ant6 | ant7 |

|---|---|---|---|---|---|---|---|---|

| 0 | 0 | -π | -2π | -3π | … | … | … | -7π |

| 1 | 0 | -7π/8 | -2*7π/8 | -3*7π/8 | … | … | … | -7*7π/8 |

| 2 | 0 | -6π/8 | -2*6π/8 | -3*6π/8 | … | … | … | -7*6π/8 |

| 3 | 0 | -5π/8 | -2*5π/8 | -3*5π/8 | … | … | … | -7*5π/8 |

| 4 | 0 | -4π/8 | -2*4π/8 | -3*4π/8 | … | … | … | -7*4π/8 |

| 5 | 0 | -3π/8 | -2*3π/8 | -3*3π/8 | … | … | … | -7*3π/8 |

| 6 | 0 | -2π/8 | -2*2π/8 | -3*2π/8 | … | … | … | -7*2π/8 |

| 7 | 0 | -1π/8 | -2*1π/8 | -3*1π/8 | … | … | … | -7*1π/8 |

| 8 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 9 | 0 | 1π/8 | 2*1π/8 | 3*1π/8 | … | … | … | 7*1π/8 |

| 10 | 0 | 2π/8 | 2*2π/8 | 3*2π/8 | … | … | … | 7*2π/8 |

| 11 | 0 | 3π/8 | 2*3π/8 | 3*3π/8 | … | … | … | 7*3π/8 |

| 12 | 0 | 4π/8 | 2*4π/8 | 3*4π/8 | … | … | … | 7*4π/8 |

| 13 | 0 | 5π/8 | 2*5π/8 | 3*5π/8 | … | … | … | 7*5π/8 |

| 14 | 0 | 6π/8 | 2*6π/8 | 3*6π/8 | … | … | … | 7*6π/8 |

| 15 | 0 | 7π/8 | 2*7π/8 | 3*7π/8 | … | … | … | 7*7π/8 |
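Each row of the table is a progressive phase vector: antenna n gets n times the per-antenna step. The following sketch builds such a vector and evaluates the array strength in a given direction. It is a simplified model written for this explanation, not the code of ant_array_beam_pattern; the helper names and the angle convention (angle measured from the direction perpendicular to the array line) are assumptions.

```python
import cmath
import math

def ula_strength(theta_deg, phases_rad, spacing_wavelength=0.5):
    """Field strength of a uniform linear array of omnidirectional antennas."""
    phase_per_element = (2 * math.pi * spacing_wavelength
                         * math.sin(math.radians(theta_deg)))
    return abs(sum(cmath.exp(1j * (n * phase_per_element + phase))
                   for n, phase in enumerate(phases_rad)))

def progressive_phases(num_ant, step_rad):
    """Phase vector 0, step, 2*step, ... as in one row of the table."""
    return [n * step_rad for n in range(num_ant)]

phases = progressive_phases(8, math.pi / 2)   # row "time 12": step 4*pi/8
print(ula_strength(-30, phases))   # close to 8: all eight waves add in phase
print(ula_strength(0, phases))     # close to 0: the beam has moved away from 0 degrees
```

With 8 antennas the peak strength is 8 instead of 2, and the beam around the peak is narrower than in the two-antenna case.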

(To be continued …)

The commonly used wavelength of red light in double-slit interference is approximately 620 to 750 nanometers, corresponding to a frequency range of 484 to 400 THz. Here, we take a wavelength of 700 nanometers, which corresponds to a frequency of 428.6 THz. In double-slit interference experiments, it is generally required that the distance between the two slits be less than 1mm. Here, we take 0.5mm, which is about 714 times the wavelength. In this setup, the two slits correspond to the two antennas in our model. To set these parameters in our simulation code, the command is as follows:

python3 -c "from beamforminglib import *; ant_array_beam_pattern(freq_hz=428.6e12, array_style='linear', num_ant=2, ant_spacing_wavelength=714, angle_vec_degree=np.arange(-1, 1, 0.0001))"

The parameter np.arange(-1, 1, 0.0001) at the end means that we are observing the beam within a range of -1 degree to +1 degree (which is near 0 degrees, where the light is shooting from left to right towards the double slits/antennas). Within the -1 to +1 degree range, the step size for observing angles is 0.0001 degrees. Running the above Python program yields the following result:

In the image above, the narrow blue region extending to the right represents the beam simulating light emerging from the two slits. The question is whether there is an “interference pattern” within this beam. To facilitate zooming in and out using the mouse in a matplotlib plot, let’s redraw the above image in a Cartesian coordinate system:

python3 -c "from beamforminglib import *; ant_array_beam_pattern(freq_hz=428.6e12, array_style='linear', num_ant=2, ant_spacing_wavelength=714, angle_vec_degree=np.arange(-1, 1, 0.0001), plot_in_polar=False)"

By further zooming in on the image above using the mouse, we obtain the following image:

As we can see, a large number of narrow beams are observed. These very narrow “light beams” hitting the screen are what show up as the interference pattern! The farther away the screen is, the larger the spacing between the bright lines. The light is strongest directly to the right (the 0-degree direction) because there is no path/phase difference from the two slits to this position. In some directions deviating from 0 degrees, strong light/beams appear again because the path difference from the two slits in those directions is exactly an integer multiple of the wavelength, causing the waves to reinforce each other again. Imagine sine waves whose phases differ by an integer multiple of 2π: they add up just as they do at 0 degrees.
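The numbers used in this experiment, and the directions of the bright lines, follow from simple arithmetic. The sketch below is a hand calculation rather than part of beamforminglib: the speed of light is rounded to 3.0e8 m/s (an assumption that reproduces the 428.6 THz quoted earlier), and `bright_line_angle_deg` is a hypothetical helper based on the condition that the path difference d·sin(theta) equals a whole number of wavelengths.

```python
import math

SPEED_OF_LIGHT = 3.0e8   # m/s, rounded value (assumption)
wavelength = 700e-9      # m
slit_spacing = 0.5e-3    # m

freq_thz = SPEED_OF_LIGHT / wavelength / 1e12
spacing_wavelength = slit_spacing / wavelength

def bright_line_angle_deg(order, spacing_in_wavelengths):
    """Direction where the path difference equals `order` whole wavelengths."""
    return math.degrees(math.asin(order / spacing_in_wavelengths))

print(f"frequency: {freq_thz:.1f} THz, spacing: {spacing_wavelength:.0f} wavelengths")
for order in range(4):
    print(order, f"{bright_line_angle_deg(order, spacing_wavelength):.4f} deg")
```

The neighbouring bright lines are only about 0.08 degrees apart, which is why the simulation needs an angle step as fine as 0.0001 degrees to resolve them.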

Since the strongest beam at 0 degrees is due to the absence of a phase difference, what would happen if we used a method (such as placing a special glass in front of one of the slits) to introduce a phase/delay of half a wavelength more for the light coming out of one slit compared to the other? Would the waves cancel each other out at 0 degrees? To verify this idea, it’s just a matter of issuing a single command:

python3 -c "from beamforminglib import *; ant_array_beam_pattern(freq_hz=428.6e12, array_style='linear', num_ant=2, ant_spacing_wavelength=714, angle_vec_degree=np.arange(-1, 1, 0.0001), plot_in_polar=False, beamforming_vec_rad=np.array([0, np.pi]))"

The last parameter in the above command introduces delays/phases of 0 and π for the light coming from the two slits. Since 2π represents a full period/wavelength, π is half a period/wavelength. The distribution of the beam with this phase difference is shown in the image below (a manually zoomed-in section of the plot from the above command using the mouse):

This time, there is no beam/bright line in the 0-degree direction (directly to the right). The bright lines have shifted to angles on both sides of 0 degrees. If there were a screen to the right, one would observe that the bright lines have shifted.

(To be continued …)


The image above shows the world-famous double-slit experiment, which has been known for over 200 years. It served as perfect proof that light is a wave, thoroughly refuting Newton’s particle theory. Even today, many people still debate over its quantum mechanical explanations. Interestingly, this experiment has a close connection to the beamforming technology we use daily in Wi-Fi/4G/5G networks.

Firstly, what is a beam?

Imagine the beam of light emitted from a flashlight. Abstractly, a beam means that the electromagnetic waves (or, on the receiving side, the receiver’s sensitivity to electromagnetic waves) are stronger in one direction and weaker in others.

Starting with the conventional “spherical chicken in a vacuum,” if a wave source radiates uniformly in all directions, it forms an “omnidirectional” beam. This leads to two essential parameters needed to define a beam: direction and strength.

To explain these two aspects, for convenience, let’s use a two-dimensional model by applying a dimensional reduction to the “spherical chicken in a vacuum.” We will consider the beam pattern of the “rod” antenna commonly used in Wi-Fi routers in the horizontal direction.

Imagine looking down from above at the horizontal plane around the antenna shown in the previous image. In this overhead view, the antenna appears as a small circle. If the antenna radiates uniformly in all horizontal directions, its beam pattern on the horizontal plane would look like the following image:

In the diagram, the range from 0 to 360 degrees represents the various directions indicated by the theta angle in the previous image. The red circle at the center is how the antenna appears in the overhead view, and the number 1 on the blue circle indicates that the radiation strength is 1 in all theta angle directions. The value 1 can be understood as an ideal reference unit (corresponding to a gain of 0dB, but due to losses, it generally doesn’t reach 0dB; for simplicity, we’ll ignore the losses for now). The command to draw the above result is as follows:

git clone https://github.com/JiaoXianjun/sdrfun.git

cd sdrfun/beamforming/python/

python3 -c "from beamforminglib import *; ant_array_beam_pattern(freq_hz=2450e6, array_style='linear', num_ant=1, ant_spacing_wavelength=0.5)"

The parameters mean: 2.45GHz carrier frequency, linear array, 1 antenna, antenna spacing of 0.5 wavelength.

Let’s add a second antenna, with the two antennas spaced 6.1cm apart, which is half the wavelength of the 2.45GHz frequency electromagnetic wave:

Then the beam pattern produced by these two antennas would look like the following image:

As can be seen, in the 0 and 180 degree directions, the radiation from the antennas has doubled, going from 1 to 2 (which corresponds to a 3dB gain), while in the 90 and 270 degree directions, the radiation disappears, becoming 0. The beam pattern looks like a figure “8”. The corresponding command to draw the result (the only changed parameter is the number of antennas, from 1 to 2) is as follows:

python3 -c "from beamforminglib import *; ant_array_beam_pattern(freq_hz=2450e6, array_style='linear', num_ant=2, ant_spacing_wavelength=0.5)"

This is quite interesting. While the addition of multiple antennas is intended to improve the signal, it unexpectedly makes the signal worse in some directions (the directions where the blue “8” shape is less than 1). It seems that effectively using multiple antennas in Wi-Fi routers is not easy/straight-forward, and we’ll discuss the multi-antenna setup of Wi-Fi routers in a later article.

Returning to the “8” shaped beam pattern above, it can actually be easily explained: each antenna still radiates omnidirectionally, but the superposition of the electromagnetic waves from the two antennas in space results in different strengths in different directions. For example, in the 0/180 degree directions, the electromagnetic waves from the two antennas remain strictly in sync with when they left the antennas, because the distance from each point to the two antennas is the same in these directions, meaning the electromagnetic waves from the two antennas maintain the same phase. Imagine two sine waves with the same phase being superimposed; naturally, they become stronger. In the 90/270 degree directions, however, the distance from each point to the two antennas differs by half a wavelength (the antenna spacing), which means there is a 180-degree phase difference, completely opposite. Imagine two sine waves with a 180-degree phase difference being superimposed; of course, they cancel each other out. In the directions between these two, the situation is between the two extremes.
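The reasoning above can be turned into a small calculation: the direction sets the path difference, the path difference sets the phase difference, and the phase difference sets the combined strength. The sketch below is an illustration under stated assumptions, not the code of beamforminglib: the helper names are hypothetical, and the angle is taken to be measured from the direction perpendicular to the line joining the two antennas.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s
freq_hz = 2450e6

wavelength_m = SPEED_OF_LIGHT / freq_hz
spacing_m = wavelength_m / 2
print(f"half wavelength at 2.45 GHz: {spacing_m * 100:.1f} cm")

def phase_difference_deg(theta_deg, spacing_wavelength=0.5):
    """Phase difference between the two waves in direction theta.

    Assumption: theta = 0 is perpendicular to the line joining the antennas.
    """
    return 360.0 * spacing_wavelength * math.sin(math.radians(theta_deg))

def combined_strength(theta_deg):
    """Amplitude of the sum of two unit waves with that phase difference."""
    return abs(2 * math.cos(math.radians(phase_difference_deg(theta_deg)) / 2))

for theta_deg in (0, 30, 60, 90):
    print(theta_deg, f"{phase_difference_deg(theta_deg):6.1f} deg",
          f"{combined_strength(theta_deg):.3f}")
```

The strength falls smoothly from 2 at 0 degrees to 0 at 90 degrees, which traces out one lobe of the “8” shape.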

The situation where two sine waves are superimposed with different phases is illustrated in the following image:

The command to draw the above results is as follows (please manually modify the phase difference parameter offset_degree in the script):

python3 test_sine_offset_combine.py

It shows the superposition of two sine waves with phase differences of 0 degrees, 60 degrees, 120 degrees, and 180 degrees, respectively. You can draw the situation with different phase differences by modifying the offset_degree variable in the script test_sine_offset_combine.py.
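For a quick numerical check without plotting, the peak of the combined wave can be compared with the closed form 2·|cos(offset/2)|, which follows from the identity sin(x) + sin(x + δ) = 2·sin(x + δ/2)·cos(δ/2). The snippet below is a standalone sketch and not the content of test_sine_offset_combine.py; `combined_peak` is a hypothetical helper.

```python
import math

def combined_peak(offset_degree, num_samples=3600):
    """Peak value of sin(x) + sin(x + offset) over one full period."""
    offset = math.radians(offset_degree)
    return max(
        abs(math.sin(x) + math.sin(x + offset))
        for x in (2 * math.pi * n / num_samples for n in range(num_samples))
    )

for offset_degree in (0, 60, 120, 180):
    predicted = 2 * abs(math.cos(math.radians(offset_degree) / 2))
    print(f"{offset_degree:3d} deg: sampled peak {combined_peak(offset_degree):.3f}, "
          f"2*|cos(offset/2)| = {predicted:.3f}")
```

The peak drops from 2 at 0 degrees, through about 1.73 and 1, to 0 at 180 degrees.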

Now, the question is: using the same radio wave beamforming code, if we set it to the parameters of double-slit interference in the case of light, can we reproduce the double-slit interference of light? Let’s start experimenting.

(To be continued …)

The open-source BTLE (Bluetooth Low Energy) baseband chip design

Xianjun Jiao, 2024.

SPDX-FileCopyrightText: 2024 Xianjun Jiao

SPDX-License-Identifier: Apache-2.0 license

- [Summary of reference projects and papers]

- [Summary of the version of main packages]

- [BTLE chip architecture]

- [The overall design and implementation methodology]

- [Prior arts analysis]

- [Introduction of the reference SDR BTLE project and its users]

- [Basic principle of BTLE algorithm and structure of the project files]

- [Align the Python algorithms to the SDR BTLE project]

- [Use Python script to evaluate BER under different clock error]

- [Use Python script and Verilog testbench to simulate the design]

- [Synthesis and Implementation for Xilinx FPGA]

- [Run through OpenLane2 SKY130 PDK flow to generate GDSII]

Summary of reference projects and papers

| Link | Role |

|---|---|

| https://www.bluetooth.com/specifications/specs/core-specification-5-3/ | Core Specification 5.3 is the main reference. Mainly PartA&B of Vol6: Low Energy Controller |

| https://github.com/JiaoXianjun/BTLE | The starting point. Created ~10 years ago by me. The new design files are in BTLE/python and BTLE/verilog directories |

| https://colab.research.google.com/github/efabless/openlane2/blob/main/notebook.ipynb | The OpenLane2 work flow I learnt/copied |

| https://github.com/halftop/Interface-Protocol-in-Verilog | general_uart is used for HCI (Host Controller Interface) |

| https://github.com/KennethWilke/sv-dpram | Dual port ram in Verilog (modified in this project) |

| https://public.ccsds.org/Pubs/413x0g3e1.pdf | Figure 3-3: GMSK Using a Quadrature Modulator – The GFSK modulation method adopted in this project |

| https://research.utwente.nl/en/publications/bluetooth-demodulation-algorithms-and-their-performance | Fig. 6. Phase-shift discriminator – The GFSK demodulation method adopted in this project |

Summary of the version of main packages

| Packages | Version |

|---|---|

| Ubuntu | 22.04.4 LTS 64bit |

| libhackrf-dev | amd64/jammy 2021.03.1-2 uptodate |

| Icarus Verilog | version 12.0 (stable) (s20221226-498-g52d049b51) |

| cmake | version 3.22.1 |

| build-essential | amd64/jammy 12.9ubuntu3 uptodate |

| Python | 3.10.12 |

| numpy | Version: 1.21.5 |

| matplotlib | Version: 3.5.1 |

| BTLE commit | https://github.com/JiaoXianjun/BTLE/commit/ff4f2cf17e7a7cd91db6326edd24fa7128a5d945 |

| Xilinx Vivado | 2021.1 |

| openlane | 2.0.0rc2 |

| sky130 PDK | bdc9412b3e468c102d01b7cf6337be06ec6e9c9a |

Introduction

Open-source chip design has been a hot topic in recent years. From big companies to enthusiasts, a lot of effort has been put into multiple domains: high level instruction set definition (RISC-V), new HDLs (Hardware Description Languages, such as Chisel and SpinalHDL), open chip designs (Rocket, BOOM), open EDA (Electronic Design Automation) tools (Yosys, OpenLane) and open PDKs (Process Design Kits, such as SkyWater 130). These efforts have inspired many active projects in the area of CPU (Central Processing Unit) and MCU (Micro Controller Unit) design. However, open-source designs in the radio connectivity domain remain scarce. This project, the open-source BTLE (Bluetooth Low Energy) baseband chip design, aims to establish a foundational project in the domain of open radio connectivity chip design. For the differences between this project and prior art, please see the prior art analysis section. As far as I know, this is the 1st open-source BTLE baseband project written in Verilog that covers all modules from PDU to IQ sample.

The main features and innovative points of this design are:

- Sub set of BTLE core spec v5.3

- LE 1M, with uncoded data at 1 Mb/s

- GFSK (Gaussian Frequency Shift Keying) with BT(Bandwidth-bit period product)=0.5

- Modulation index 0.5

- Preamble has 1 octet

- Access address has 4 octets

- PDU (Protocol Data Unit) has 2-39 octets

- CRC (Cyclic Redundancy Check) has 3 octets

- BER (Bit Error Rate) performance

- With max 50PPM clock error, BER 0.1% @ 24.5dB SNR

- With 20PPM clock error, BER 0.1% @ 11.5dB SNR

- Configurable gauss filter taps – Flexible bandwidth/spectrum-shape

- Support non-standard BT value or other phase smoothing strategy, such as GMSK (Gaussian Minimum Shift Keying).

- Configurable COS and SIN table – Flexible modulation index

- Support non-standard frequency deviation

- 16MHz main clock speed. 8x oversampling in both transmitter and receiver

- oversampling rate is customizable in design time

The rest of the document is organized into these sections:

- BTLE chip architecture

- The overall design and implementation methodology

- Prior arts analysis

- Introduction of the reference SDR BTLE project and its users

- Basic principle of BTLE algorithm and structure of the project files

- Align the Python algorithms to the SDR BTLE project

- Use Python script to evaluate BER under different clock error

- Use Python script and Verilog testbench to simulate the design

- Synthesis and Implementation for Xilinx FPGA

- Run through OpenLane2 SKY130 PDK flow to generate GDSII

BTLE chip architecture

(It is assumed that readers already have basic knowledge of BTLE. If not, please take a quick look at the references in the SDR BTLE project: https://github.com/JiaoXianjun/BTLE/tree/master/doc)

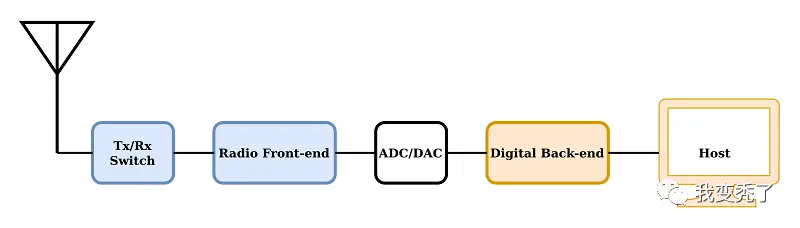

What “BTLE chip” means in this project:

- BTLE core spec v5.3 Vol1 PartA Section2: “The Bluetooth Core system consists of a Host and a Controller”.

- Vol4, HCI (Host Controller Interface) is defined over several options, such as UART (Universal Asynchronous Receiver-Transmitter), USB (Universal Serial Bus), etc.

- Vol6, the LE (Low Energy) Controller is composed of Physical Layer and Link Layer.

- The Physical Layer is responsible for GFSK modulation/demodulation till RF (Radio Frequency) and antenna.

- The Link Layer includes: packet composing/decomposing; control logic (protocol).

- In this project, BTLE chip refers to the LE controller.

This project implements the LE controller, which has a Physical Layer and a Link Layer, except for:

- ADC(Analog to Digital Converter)

- DAC(Digital to Analog Converter)

- zero-IF (Intermediate Frequency) analog transceiver

In other words, this project focuses on the baseband part, not RF.

The control logic in the Link Layer and the HCI are currently dummy modules due to limited development time. This control logic is suitable for implementation on a simple MCU, such as a RISC-V core.

The overall design and implementation methodology

In this project the open BTLE baseband is implemented in Verilog. The bit-true baseband algorithm model is implemented and verified in Python. The Python scripts are also used to generate test vectors and reference results for the Verilog modules. The Python algorithm is based on and aligned with the parent project: BTLE – The open-source SDR (Software Defined Radio) Bluetooth Low Energy, which I created about 10 years ago. The SDR BTLE project is written in C and can communicate with commercial BTLE devices (phones, tablets, etc.) via SDR hardware (HackRF, BladeRF). It works not only on the fixed-frequency advertisement channels 37/38/39, but can also track the frequency-hopping data channel traffic, which means the protocol implementation in the reference SDR BTLE project is fairly complete. Because the functionality of the Python scripts is aligned with the SDR BTLE project, the Python scripts can be trusted as a reference. The Verilog testbench takes the test vectors and the reference result vectors generated by the Python scripts and simulates the whole design in iverilog, so the Verilog design is checked against the same reference. The SDR BTLE C program, the Python scripts and the Verilog testbench exchange test vectors and reference results via files. You will see this validation chain throughout the rest of this document.

Prior arts analysis

-

Bluetooth Low Energy Baseband, 2018, is a classroom project. It is written in Chisel, and only includes the bit level processing (scrambling and CRC) of the LE Link Layer. GFSK modulation and demodulation is not included. It is far from a complete LE baseband.

-

Low Power Bluetooth Baseband with a RISC-V Core for Autonomous Sensors using Chisel, 2022-10-13, is a master thesis work that writes some BTLE baseband functionalities in Chisel. Like the previous classroom project, this project also mainly implements the bit level processing (scrambling and CRC). GFSK modulation and demodulation is not included. It is far from a complete LE baseband.

-

A Bluetooth Low Energy Radio using FPGA SERDES: No ADC, AGC, filters, mixers, or amplifiers required., 2021, is a BTLE receiver and transmitter written in nmigen HDL. It learnt from my SDR BTLE project and acknowledges it at the end of its README. The main unique point of the project is implementing a kind of “Sigma Delta Modulation” with the high speed SERDES differential lines on the FPGA to create an ADC and DAC. It is experimental work, mainly demonstrating the unique idea of using FPGA SERDES as an SDR radio frontend. Full support/testing of every corner of the BTLE protocol is not its main consideration. For example, it supports only fixed preamble and access address patterns for advertisement channels, and does not support data channel packet transmission and reception. In comparison, my SDR BTLE project aims at a complete baseband that works not only on advertisement channels but also on data channels. Unlike the experimental SERDES idea, my project works with a mature zero-IF analog transceiver.

-

A Fully Synthesizable Bluetooth Baseband Module for a System-on-a-Chip, 2003, ETRI Journal, Volume 25, Number 5, October 2003, is for classic Bluetooth instead of Bluetooth LE (the two are different). The paper only reports results; the design is not open source.

Introduction of the reference SDR BTLE project and its users

The SDR BTLE project was created by me about 10 years ago. It implements the BTLE stack in C and can communicate with COTS (Commercial Off The Shelf) devices via SDR boards, such as HackRF and BladeRF. Its capability includes not only advertisement packet processing on the fixed frequency channels but also data packet processing on the frequency hopping channels. Since it was created, it has been used in multiple projects and academic papers. This track record gives confidence in using it as the starting point of the open BTLE chip design.

Some example works that are based on my SDR BTLE project:

-

Bidirectional Bluetooth Backscatter With Edges, 2024 IEEE Transactions on Mobile Computing (Volume: 23, Issue: 2, February 2024). In this work the SDR BTLE acts as a core module in the SDR edge server (with HackRF) to setup a bidirectional link between the BTLE reader and the BTLE backscatter tag.

-

Bluetooth Low Energy with Software-Defined Radio Proof-of-Concept and Performance Analysis, 2023 IEEE 20th Consumer Communications and Networking Conference (CCNC). In this work, the USB based SDR devices (such as HackRF, BladeRF) in the SDR BTLE project are replaced by PCIe based SDR devices to achieve the accurate IFS (Inter Frame Space) timing requirement of the BTLE standard. The BTLE stack on the host PC is based on the SDR BTLE project.

-

Tracking Anonymized Bluetooth Devices, 2019, Proceedings on Privacy Enhancing Technologies. This work uses the SDR BTLE project to access the low level raw PHY bit of the BTLE packet, then discovers the vulnerability of BTLE MAC address randomization mechanism. The author says that normally some low level information/PHY-header are discarded by the ASIC/COTS BTLE chip, so SDR is a better choice for BTLE security research. The counter measure was also proposed.

-

Snout: A Middleware Platform for Software-Defined Radios, 2023, IEEE Transactions on Network and Service Management (Volume: 20, Issue: 1, March 2023). This paper implemented a middleware platform that supports many SDR programs and platforms. The btle_rx program (the receiver program in the SDR BTLE project package) is integrated as a receiver example.

-

Bluetooth Low Energy Receiver of portapack-mayhem, 2023. At the end of the page, it says Reference Code Used in Porting Protocol: https://github.com/JiaoXianjun/BTLE . The PortaPack is a very popular “HAT” that can be attached to the HackRF SDR board to run the SDR fully in embedded/hand-held style. The firmware has integrated lots of SDR applications. The BTLE application is based on my SDR BTLE project.

-

Unveiling IoT Vulnerabilities: Exploring Replay Attacks on BLE Devices, 2024. In this experiment, the btle_tx program (the transmitter program in the SDR BTLE project package) is used to generate the attack packet. The purpose is to use the SDR device to impersonate other BTLE devices.

-

Security Fuzz Testing Framework for Bluetooth Low Energy Protocols, 2019, Communications of the CCISA, Regular Paper, (Vol 25, No 1, Feb 2019), TAIWAN. This paper integrates the btle_tx program for fuzzing (malformed) packet generation in their BTLE fuzzing test framework.

-

Bluetooth Security, 2018, Book Chapter, pp 195-226, First Online: 20 March 2018. In this chapter of the book “Inside Radio: An Attack and Defense Guide”, the SDR BTLE project is introduced as a tool for security research.

Basic principle of BTLE algorithm and structure of the project files

According to the section “BTLE chip architecture”, the BTLE chip actually refers to the LE controller. The controller is composed of two modules: Link Layer and Physical Layer. The following figure shows the big picture of the BTLE operation principle and the modules involved, with Verilog file names in blue (Python function names are similar). It also shows what is included in this project and what is not. To check how each module works, please refer to the section “Use Python script and Verilog testbench to simulate the design”.

According to the standard, the Link Layer includes the control logic and packet composition/decomposition part. The Physical Layer does the GFSK modulation/demodulation till RF and antenna. The following further elaboration on the operation principle is based on the reference SDR BTLE project, the standard (core spec v5.3) and some other references. The principle is also shown in the above figure.

The packet composition, also called bit level processing, calculates the CRC24 and appends the 3 CRC octets to the original PDU. The PDU with its CRC is then scrambled to get the Physical Layer bits. Before these bits are sent to the GFSK modulation in the Physical Layer, the preamble (1 octet) and the access address (4 octets) are prepended to the scrambled bits. According to the standard, the scrambling pattern depends on the channel number, and the preamble depends on the first bit of the access address. The CRC initial state and the access address are either fixed (advertisement channel) or negotiated with peers (data channel).
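The field sizes above fix the length of every packet on air, and with the LE 1M rate of 1 Mb/s they also fix its duration. The following is a small bookkeeping sketch, not code from btlelib.py; the function and constant names are hypothetical.

```python
PREAMBLE_OCTETS = 1
ACCESS_ADDRESS_OCTETS = 4
CRC_OCTETS = 3
BIT_RATE = 1_000_000  # LE 1M: 1 Mb/s

def packet_octets(pdu_octets):
    """Total on-air octets for a PDU of the given length (2 to 39 octets)."""
    if not 2 <= pdu_octets <= 39:
        raise ValueError("this design handles PDUs of 2 to 39 octets")
    return PREAMBLE_OCTETS + ACCESS_ADDRESS_OCTETS + pdu_octets + CRC_OCTETS

def airtime_us(pdu_octets):
    """On-air duration in microseconds at 1 Mb/s (one bit per microsecond)."""
    return packet_octets(pdu_octets) * 8 * 1_000_000 / BIT_RATE

for pdu_len in (2, 39):
    print(pdu_len, packet_octets(pdu_len), "octets", airtime_us(pdu_len), "us")
```

The shortest packet (2-octet PDU) occupies 10 octets and the longest (39-octet PDU) occupies 47 octets on air.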

For the principle of the Physical Layer GFSK modulation, refer to “Figure 3-3: GMSK Using a Quadrature Modulator” in CCSDS 413.0-G-3. The basic idea of the implementation is: bit 0 decrements the input address of the phase (COS and SIN) table to generate a negative frequency deviation; bit 1 increments the input address of the phase table to generate a positive frequency deviation. The smoothing of the 0-1 transitions is controlled by the coefficients of the gauss filter, which in turn shapes the spectrum. The maximum positive/negative frequency deviation is controlled by the waveform samples in the COS&SIN table. In this project, both the gauss filter coefficients and the COS&SIN table are configurable, which helps the design support multiple standards/purposes beyond BTLE.
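The table-address idea can be sketched in a few lines of Python. This is a deliberately simplified illustration and not the project's gfsk_modulation_fixed_point: the gauss filter is omitted (so the result is plain continuous-phase FSK without smoothing), floating point is used instead of fixed point, and the table size of 32 is a hypothetical choice. With 8 samples per bit, one address step of 2π/32 per sample gives a phase change of π/2 per bit, which corresponds to a modulation index of 0.5.

```python
import math

SAMPLES_PER_BIT = 8   # 8x oversampling, as in this design
TABLE_SIZE = 32       # hypothetical: one address step = 2*pi/32 = pi/16 rad

COS_TABLE = [math.cos(2 * math.pi * k / TABLE_SIZE) for k in range(TABLE_SIZE)]
SIN_TABLE = [math.sin(2 * math.pi * k / TABLE_SIZE) for k in range(TABLE_SIZE)]

def fsk_modulate(bits):
    """Return (i, q) samples; bit 1 steps the table address up, bit 0 down."""
    address = 0
    samples = []
    for bit in bits:
        step = 1 if bit else -1
        for _ in range(SAMPLES_PER_BIT):
            # The modulo keeps the address inside the table (phase wraps at 2*pi)
            address = (address + step) % TABLE_SIZE
            samples.append((COS_TABLE[address], SIN_TABLE[address]))
    return samples

iq = fsk_modulate([1, 1, 0, 1])
print(len(iq), "samples, last sample:", iq[-1])
```

Changing the step size per sample (or, equivalently, the table contents) changes the frequency deviation, which is the flexibility the configurable COS&SIN table provides.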

The packet decomposition in the Link Layer at the receiver side does the inverse bit level processing of that in the transmitter. It is trivial.

The GFSK demodulation in the Physical Layer at the receiver side uses a simple Signal-to-Bit algorithm (digital baseband version of Fig.6 in https://research.utwente.nl/en/publications/bluetooth-demodulation-algorithms-and-their-performance):

sign(i0*q1-i1*q0).

Where sign() means taking the sign of the result. Positive means 1, negative means 0. i0, q0, i1, q1 are input IQ samples at two successive sampling moments spaced by 1us (according to the LE 1M PHY in the standard)

The expression above detects whether the phase rotates clockwise or counter-clockwise between two successive IQ samples. It starts from:

(i1+q1*j)*conj(i0+q0*j) = i1*i0+q1*q0+(i0*q1-i1*q0)*j

It is easy to understand that the sign of the imaginary part i0*q1-i1*q0 decides the rotation direction.
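A floating-point sketch of this decision rule is shown below. It is not the project's gfsk_demodulation_fixed_point; the function names are hypothetical, the test signal is an idealized noise-free phasor sampled once per bit period, and a result of exactly zero is arbitrarily mapped to bit 0.

```python
import math

def demod_bit(i0, q0, i1, q1):
    """sign(i0*q1 - i1*q0): 1 for counter-clockwise rotation, 0 for clockwise."""
    return 1 if (i0 * q1 - i1 * q0) > 0 else 0

def demodulate(iq_per_bit):
    """Demodulate IQ samples taken once per bit period (1 us apart)."""
    return [demod_bit(i0, q0, i1, q1)
            for (i0, q0), (i1, q1) in zip(iq_per_bit, iq_per_bit[1:])]

# Test signal: the phase advances +90 degrees for bit 1, -90 degrees for bit 0
bits = [1, 0, 0, 1, 1, 0]
phase = 0.0
iq = [(1.0, 0.0)]
for bit in bits:
    phase += math.pi / 2 if bit else -math.pi / 2
    iq.append((math.cos(phase), math.sin(phase)))

print(demodulate(iq) == bits)
```

Only multiplications, one subtraction and a sign test are needed, which is why this discriminator maps well to hardware.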

In our design, 8x oversampling is used in both transmitter and receiver. But the receiver does not know which one out of 8 sampling phases is the best after the signal experiences the circuit/channel propagation delay and the noise. So the receiver will do the GFSK demodulation and inverse bit level processing on all 8 sampling phases, and output the one that gives correct CRC checksum. The oversampling rate is also configurable.

In this project, the HCI UART interface between the controller Link Layer and the host is forked from https://github.com/halftop/Interface-Protocol-in-Verilog . The Link Layer control logic is temporarily a dummy module due to limited development time. In principle, the control logic module can be implemented by some simple RISC-V core.

The project includes two self-explanatory directories: python and verilog. The naming of the files is well aligned with the BTLE operation principle introduced above.

For Python files,

- btlelib.py has the top level transmitter function btle_tx and receiver function btle_rx. It also has all the sub-functions and some other helper functions, such as clock error emulation and AWGN (Additive White Gaussian Noise) channel.

- btle_tx’s subfunctions: crc24, crc24_core, scramble, scramble_core, gfsk_modulation_fixed_point, vco_fixed_point, etc.

- btle_rx’s subfunctions: gfsk_demodulation_fixed_point, search_unique_bit_sequence, scramble, scramble_core, crc24_core, etc.

- test_alignment_with_btle_sdr.py compares the signal generated by the Python transmitter (by calling btle_tx) and the signal generated by the SDR BTLE project.

- test_btle_ber.py simulates the BER vs SNR (Signal to Noise Ratio) under a specified clock frequency error (max 50PPM according to the standard). It calls btle_tx, the AWGN channel, clock error emulation and btle_rx, and runs many PDUs to get reliable BER statistics.

- test_vector_for_btle_verilog.py generates test vectors and the reference result vectors for Verilog testbench by using a similar script like test_btle_ber.py. The main difference is that it turns on the file saving flag.

For Verilog files,

- btle_controller.v is the top level module.

- btle_controller.v has Link Layer module btle_ll.v (with dummy control logic) and btle_phy.v, which actually include the Link Layer bit level processing and Physical Layer GFSK modulation and demodulation.

- btle_phy.v has btle_tx.v and btle_rx.v for transmitter and receiver respectively. btle_tx.v is matched to the btle_tx function in btlelib.py. btle_rx.v is matched to the btle_rx function in btlelib.py.

- btle_tx.v has submodules which are well aligned with the Python functions.

- btle_rx.v has 8 btle_rx_core.v instances, created via the “generate” construct of Verilog, that act as 8 parallel decoders working on 8 different sampling phases. As soon as any of the 8 decoders reports a correct CRC, the receiver stops working on the current packet. There is also timeout logic to ensure the receiver does not run endlessly.

- btle_rx_core.v has submodules which are well aligned with the Python functions.

- module/submodule specific Verilog testbench file is module_name_tb.v.

- Though the top level module btle_controller.v has a UART interface, currently the UART is not used. The testbench btle_controller_tb.v uses the exposed raw Link Layer bit interface passing through the dummy Link Layer control logic.

Align the Python algorithms to the SDR BTLE project

(If you don’t like local installation, just upload and run open_btle_baseband_chip.ipynb in google colab. This readme is for local setup.)

Install necessary libs, download the open BTLE chip design (python and verilog directory) and build the reference SDR BTLE project.

sudo apt install libhackrf-dev

sudo apt install iverilog

git clone https://github.com/JiaoXianjun/BTLE.git

cd BTLE/host/

mkdir -p build

cd build/

cmake ../

make

You should see messages of successful building like:

...

[ 75%] Building C object btle-tools/src/CMakeFiles/btle_rx.dir/btle_rx.c.o

[100%] Linking C executable btle_rx

[100%] Built target btle_rx

Run SDR BTLE project to generate IQ sample of a BTLE packet and save it to phy_sample.txt

cd ../../python/

../host/build/btle-tools/src/btle_tx 10-LL_DATA-AA-11850A1B-LLID-1-NESN-0-SN-0-MD-0-DATA-XX-CRCInit-123456

Do not worry about the failure due to lacking hardware.

Run test_alignment_with_btle_sdr.py. It calls the Python algorithm to generate IQ samples, then calculates the realtime frequency offset of both the SDR BTLE generated signal (phy_sample.txt) and the Python generated signal (by calling btle_tx in btlelib.py). The frequency offset results are saved to btle_fo.txt and python_fo.txt for further plotting and comparison.

python test_alignment_with_btle_sdr.py 2

You should see outputs like:

argument: example_idx

2

Plese run firstly:

../host/build/btle-tools/src/btle_tx 10-LL_DATA-AA-11850A1B-LLID-1-NESN-0-SN-0-MD-0-DATA-XX-CRCInit-123456

and figure like:

Please notice that “2” is given as the argument to the script. It means the example index is 2. The Python script also prints a hint of the btle_tx command line related to this example: ../host/build/btle-tools/src/btle_tx 10-LL_DATA-AA-11850A1B-LLID-1-NESN-0-SN-0-MD-0-DATA-XX-CRCInit-123456. This command has been run before. Though the hardware does not exist, the IQ sample is still generated and saved to phy_sample.txt. The command also shows some information during the packet generation:

...

before crc24, pdu

0100

after crc24, pdu+crc

01009b8950

after scramble, pdu+crc

9bc14d4c14

...

The argument 10-LL_DATA-AA-11850A1B-LLID-1-NESN-0-SN-0-MD-0-DATA-XX-CRCInit-123456 given to btle_tx means that it is a data packet in channel 10 with access address 0x11850A1B and CRC initial state 0x123456. Regarding the exact meaning of the argument of btle_tx, please refer to the project README https://github.com/JiaoXianjun/BTLE/blob/master/README.md

If you open the test_alignment_with_btle_sdr.py and find out the initialization code for example 2, you will see

print('Plese run firstly:')

print('../host/build/btle-tools/src/btle_tx 10-LL_DATA-AA-11850A1B-LLID-1-NESN-0-SN-0-MD-0-DATA-XX-CRCInit-123456')

channel_number = 10 # from the 1st field in above btle_tx command argument

access_address = '1B0A8511' # due to byte order, the 11850A1B in above argument needs to be 1B0A8511

crc_state_init_hex = '123456' # from the CRCInit field in above btle_tx command argument

crc_state_init_bit = bl.hex_string_to_bit(crc_state_init_hex) # from the CRCInit field in above btle_tx command argument

pdu_bit_in_hex = '0100' # from the output of above btle_tx command

These are aligned with the argument and output of the btle_tx program. This is how SDR BTLE and Python take the same input. Only when the inputs are the same is a comparison of the outputs meaningful.
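The byte-order swap mentioned in the access_address comment (11850A1B becoming 1B0A8511) can be reproduced with a small helper. This is a minimal sketch; `reverse_byte_order` is a hypothetical name and is not part of btlelib.py.

```python
def reverse_byte_order(hex_string):
    """Reverse the byte order of a hex string, e.g. for the access address."""
    raw = bytes.fromhex(hex_string)      # '11850A1B' -> b'\x11\x85\x0a\x1b'
    return raw[::-1].hex().upper()       # reversed bytes, back to upper-case hex


if __name__ == "__main__":
    print(reverse_byte_order("11850A1B"))  # 1B0A8511
```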

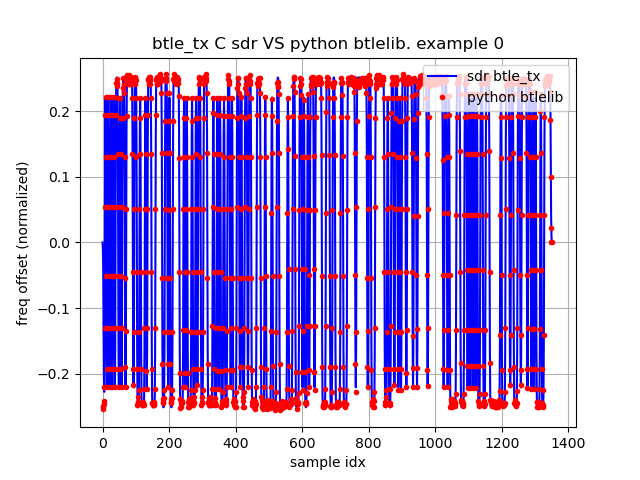

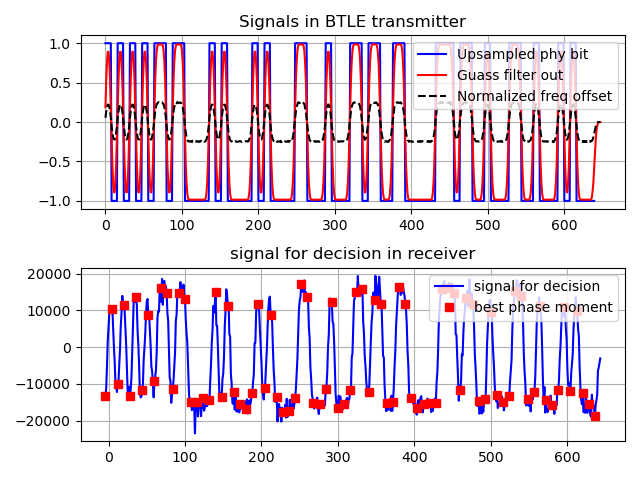

In the above figure the Python btlelib.py output (red) is well aligned with the SDR BTLE btle_tx output. The minor differences happen at the ramping up area (at the beginning) and the max frequency deviation area (around +/-0.25). It is due to the fact that the Python scripts use slightly different oversampling rate, gauss filter coefficients and COS&SIN table. But they both meet the BTLE standard requirements.

On page 2641 of the BTLE core spec v5.3, it says “The minimum frequency deviation shall never be less than 185 kHz when transmitting at 1 megasymbol per second (Msym/s) symbol rate”. The above figure shows the frequency offset normalized to the 1 Msym/s symbol rate. The normalized value of 185 kHz is 185 kHz / 1 MHz = 0.185. In the figure, the max frequency deviation during each bit period is always bigger than 0.2, which is larger than the minimum requirement of 0.185 in the standard.
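The normalization used in this check is plain arithmetic, sketched below. The constant and function names are illustrative assumptions, not identifiers from the project scripts.

```python
SYMBOL_RATE_HZ = 1e6        # 1 Msym/s, from the quoted spec text
MIN_DEVIATION_HZ = 185e3    # minimum frequency deviation from the quoted spec text


def normalize_deviation(deviation_hz, symbol_rate_hz=SYMBOL_RATE_HZ):
    """Express a frequency deviation as a fraction of the symbol rate."""
    return deviation_hz / symbol_rate_hz


def meets_minimum(peak_normalized_deviation):
    """True if a normalized peak deviation satisfies the 185 kHz minimum."""
    return abs(peak_normalized_deviation) >= normalize_deviation(MIN_DEVIATION_HZ)


if __name__ == "__main__":
    print(normalize_deviation(MIN_DEVIATION_HZ))  # normalized minimum
    print(meets_minimum(0.2))                     # peak value read from the figure
```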

Now let’s run example 0 which has a longer packet in advertisement channel number 37.

../host/build/btle-tools/src/btle_tx 37-DISCOVERY-TxAdd-1-RxAdd-0-AdvA-010203040506-LOCAL_NAME09-SDR/Bluetooth/Low/Energy r500

Then run test_alignment_with_btle_sdr.py with example index 0 and compare the Python and SDR BTLE result. Again they are aligned.

python test_alignment_with_btle_sdr.py 0

Use Python script to evaluate BER under different clock error

After we have verified Python btle_tx model/algorithm, the corresponding Python receiver algorithm is designed and implemented in btlelib.py. Please find the basic idea of the receiver algorithm in the section “Basic principle of BTLE algorithm and structure of the project files”

Then the next step is evaluating the BER (Bit Error Rate) performance of the receiver algorithm before going to the Verilog implementation. The BER performance is mainly affected by the AWGN noise, clock frequency error between the transmitter and the receiver. The multipath effect is not dominant here because the BTLE is basically a narrow band system. The related requirements in the standard (core spec v5.3) are listed:

- “3.1 MODULATION CHARACTERISTICS: The symbol timing accuracy shall be better than ±50 ppm.”

- “4 RECEIVER CHARACTERISTICS: The reference sensitivity level referred to in this section is -70 dBm”

- “4.1 ACTUAL SENSITIVITY LEVEL: Maximum Supported Payload Length (bytes) 1 to 37, BER 0.1%. LE Uncoded PHYs, Sensitivity (dBm) <= -70”

For today's hardware, the above requirements are not tough. A crystal can easily reach an accuracy well below 50PPM. At -70dBm RSSI (Received Signal Strength Indicator) level, the SNR can easily exceed 25dB.

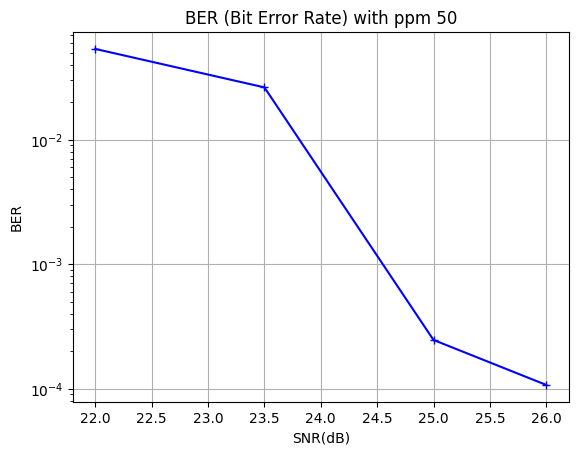

The Python script test_btle_ber.py constructs the simulation chain of btle_tx, AWGN channel and clock error emulation, btle_rx and BER statistics. It runs 300 packets with random content to achieve stable BER results. The packet has the maximum 37 octets in the PDU payload field, and 39 octets (2 octet header) in total for the PDU. It means 300*39*8 = 93600 bits passing through at each SNR point. There will be 93.6 error bits for BER 0.1%. Normally having around 100 error bits is regarded as sufficient.
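The bit budget above is easy to re-derive when choosing a different packet count. The sketch below only repeats the arithmetic from the text; the function names are hypothetical and do not come from test_btle_ber.py.

```python
import math

NUM_PACKETS = 300      # packets per SNR point, from the text
PDU_OCTETS = 39        # 37 payload octets + 2 header octets
BITS_PER_OCTET = 8
TARGET_BER = 1e-3      # BER 0.1%


def bits_per_snr_point(num_packets=NUM_PACKETS, pdu_octets=PDU_OCTETS):
    """Number of PDU bits simulated at each SNR point."""
    return num_packets * pdu_octets * BITS_PER_OCTET


def expected_error_bits(num_bits, ber=TARGET_BER):
    """Average number of bit errors observed at the given BER."""
    return num_bits * ber


def packets_needed(target_errors, ber=TARGET_BER, pdu_octets=PDU_OCTETS):
    """Packets required to observe `target_errors` errors on average."""
    bits = target_errors / ber
    return math.ceil(bits / (pdu_octets * BITS_PER_OCTET))


if __name__ == "__main__":
    n_bits = bits_per_snr_point()
    print(n_bits, expected_error_bits(n_bits), packets_needed(100))
```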

Now let’s run a worst 50PPM case by giving 50 as argument to the test_btle_ber.py, and plot the BER-SNR curve. It will take a while.

python test_btle_ber.py 50

During the simulation, some realtime info is shown: the ppm value, the frequency offset (50PPM –> 122.5KHz under the 2.45GHz center frequency of the 2.4GHz ISM band), the BER and the related number of error bits, etc.
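The PPM to frequency offset conversion quoted above is a one-line calculation. A minimal sketch, with `ppm_to_hz` as a hypothetical helper name:

```python
def ppm_to_hz(ppm, carrier_hz):
    """Frequency offset in Hz caused by a clock error given in PPM."""
    return ppm * carrier_hz / 1e6


if __name__ == "__main__":
    # 50 PPM at the 2.45 GHz center frequency used in the text
    print(ppm_to_hz(50, 2.45e9) / 1e3, "kHz")
```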

The figure shows that BER 0.1% is achieved around SNR 24.5dB, which is easy to have with RSSI -70dBm. This means the receiver algorithm meets the standard requirements on BER/sensitivity.

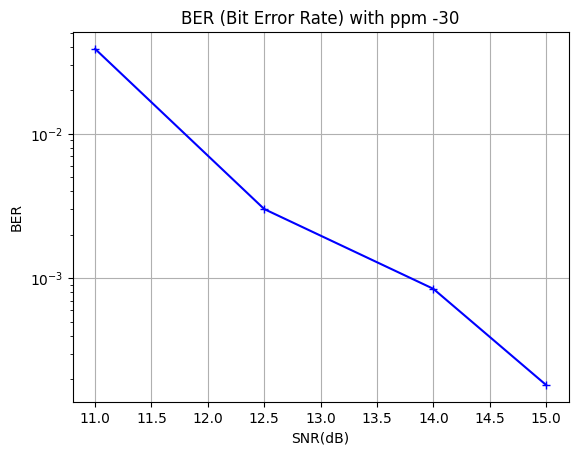

Let’s simulate another better case: -30PPM.

python test_btle_ber.py -30

From the above figure, BER 0.1% is achieved around SNR 13.5dB. In modern hardware, 30PPM is an easy task for a crystal, and it already can bring big gain on the sensitivity.

Use Python script and Verilog testbench to simulate the design

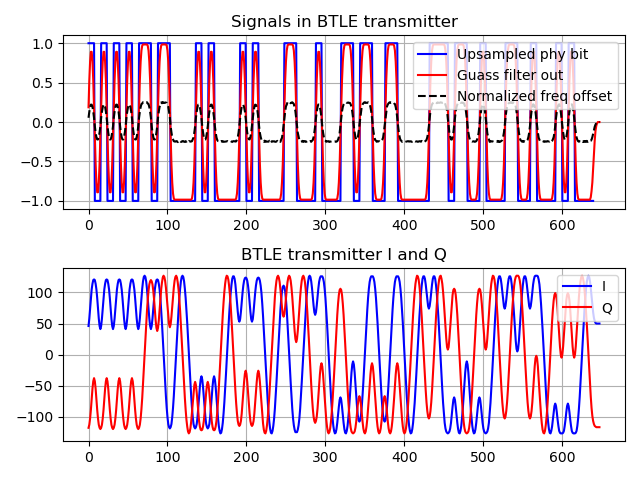

Run test_vector_for_btle_verilog.py to generate test vectors and reference results into the verilog directory. The script also shows the input and output waveforms of some key Python/Verilog modules.

python test_vector_for_btle_verilog.py 2

In the above example, “2” is given as the argument to test_vector_for_btle_verilog.py. It is the same example index as introduced in the section “Align the Python algorithms to the SDR BTLE project”.

The full argument list for test_vector_for_btle_verilog.py is: example_idx snr ppm_value num_sample_delay. The names are self-explanatory.

The figure shows that:

- The upsampled PHY bit (PDU bit after CRC and scramble), which is in blue squared NRZ waveform, is shown in the top figure.

- After the gauss filter, the PHY bit NRZ waveform is smoothed, and shown in red.

- Then the gauss filter output drives the GFSK modulator to generate the IQ (COS, SIN) sample which is shown as I (red) and Q (blue).

- The IQ sample related normalized frequency offset is shown in the dashed black curve in the top figure. It is well aligned with the PHY bit and gauss filter output waveform.

- The bottom figure shows the output of the GFSK demodulator (algorithm: sign(i0*q1 - i1*q0)) in blue, and the best sampling phase (out of 8 possible phases) that gives correct CRC, in red square.
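The demodulation rule sign(i0*q1 - i1*q0) can be written as a short floating-point NumPy sketch. It is an illustration only: the project's gfsk_demodulation_fixed_point works in fixed point, and the function name `gfsk_demodulate` and the mapping of a positive decision value to bit 1 are assumptions here.

```python
import numpy as np


def gfsk_demodulate(i, q):
    """Differential GFSK demodulation on consecutive I/Q sample pairs.

    decision[n] = i[n] * q[n+1] - i[n+1] * q[n], which is proportional to
    sin(phase[n+1] - phase[n]); its sign gives the frequency direction.
    """
    i = np.asarray(i, dtype=float)
    q = np.asarray(q, dtype=float)
    decision = i[:-1] * q[1:] - i[1:] * q[:-1]
    bits = (decision > 0).astype(int)  # assumed mapping: positive shift -> 1
    return bits, decision
```

N input samples give N-1 decisions, since each decision needs two consecutive samples.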

Now let’s run the top level Verilog testbench btle_controller_tb.v which takes the Python transmitter input&output and the Python receiver input&output as test vectors.

cd ../verilog/

iverilog -o btle_controller_tb btle_controller_tb.v btle_controller.v btle_ll.v uart_frame_rx.v uart_frame_tx.v rx_clk_gen.v tx_clk_gen.v btle_phy.v btle_rx.v btle_rx_core.v gfsk_demodulation.v search_unique_bit_sequence.v scramble_core.v crc24_core.v serial_in_ram_out.v dpram.v btle_tx.v crc24.v scramble.v gfsk_modulation.v bit_repeat_upsample.v gauss_filter.v vco.v

vvp btle_controller_tb

You should see outputs like:

...

rx Save output to btle_rx_test_output.txt

rx crc_ok flag verilog 1 python 1

rx Test PASS.

rx compare the btle_rx_test_output_mem and the btle_rx_test_output_ref_mem ...

rx 0 difference found

TEST FINISH.

tx test_ok 1

rx test_ok 1

btle_controller_tb.v:605: $finish called at 391031250 (1ps)

Notice that the testbench also emulates the configuration behaviors (before sending packet) to show the runtime flexibility, such as writing the gauss filter coefficient memory and COS&SIN table, that were generated by Python scripts, into the related memory areas inside the controller.

The Verilog testbench btle_controller_tb.v compares the Verilog output with the reference Python output and gives a PASS/FAIL indication at the end. From the above testbench output, the test result is PASS.

Testbenches are provided for almost all submodules that compose the BTLE controller:

- crc24_tb.v

- scramble_tb.v

- bit_repeat_upsample_tb.v

- gauss_filter_tb.v

- vco_tb.v

- gfsk_demodulation_tb.v

- search_unique_bit_sequence_tb.v – here the unique bit sequence is referring to the access address

- btle_rx_core_tb.v

- btle_tx_tb.v

- btle_rx_tb.v

At the beginning of each Verilog module_tb.v file, there are detailed instructions about how to generate the test vectors and run the testbench.

Some submodule testbench examples will be shown in the following part.

Run the CRC 24 testbench:

iverilog -o crc24_tb crc24_tb.v crc24.v crc24_core.v

vvp crc24_tb

Outputs:

VCD info: dumpfile crc24_tb.vcd opened for output.

WARNING: crc24_tb.v:46: $readmemh(btle_config.txt): Not enough words in the file for the requested range [0:31].

CRC_STATE_INIT_BIT 123456

Reading input from btle_tx_crc24_test_input.txt

56 read finish for test input.

Reading output ref from btle_tx_crc24_test_output_ref.txt

80 read finish for test output ref.

56 input

80 output

Save output to btle_tx_crc24_test_output.txt

Compare the crc24_test_output_mem and the crc24_test_output_ref_mem ...

0 error found! Test PASS.

crc24_tb.v:164: $finish called at 86406250 (1ps)

Above testbench shows that 24 bits are padded after the original packet, and the bits are equal to those generated by the Python scripts.

Run the testbench of VCO (Voltage Controlled Oscillator), which has the COS&SIN table as the last stage of GFSK modulator in the transmitter btle_tx:

iverilog -o vco_tb vco_tb.v vco.v dpram.v

vvp vco_tb

Outputs:

VCD info: dumpfile vco_tb.vcd opened for output.

656 read finish for test input.

Reading output ref from btle_tx_vco_test_output_cos_ref.txt

656 read finish for test output cos ref.

Reading output ref from btle_tx_vco_test_output_sin_ref.txt

656 read finish for test output sin ref.

cos sin table initialized.

656 input

656 output

Save output cos to btle_tx_vco_test_output_cos.txt

Save output sin to btle_tx_vco_test_output_sin.txt

Compare the vco_test_output_cos_mem and the vco_test_output_cos_ref_mem ...

0 error found! output cos Test PASS.

Compare the vco_test_output_sin_mem and the vco_test_output_sin_ref_mem ...

0 error found! output sin Test PASS.

vco_tb.v:223: $finish called at 214218750 (1ps)

Run the full receiver btle_rx testbench:

iverilog -o btle_rx_tb btle_rx_tb.v btle_rx.v btle_rx_core.v gfsk_demodulation.v search_unique_bit_sequence.v scramble_core.v crc24_core.v serial_in_ram_out.v dpram.v

vvp btle_rx_tb

Outputs:

VCD info: dumpfile btle_rx_tb.vcd opened for output.

WARNING: btle_rx_tb.v:61: $readmemh(btle_config.txt): Not enough words in the file for the requested range [0:31].

CHANNEL_NUMBER 10

CRC_STATE_INIT_BIT 123456

ACCESS_ADDRESS 11850a1b

Reading input I from btle_rx_test_input_i.txt

656 read finish for test input I.

Reading input Q from btle_rx_test_input_q.txt

656 read finish for test input Q.

Reading output crc_ok ref from btle_rx_test_output_crc_ok_ref.txt

1 read finish from btle_rx_test_output_crc_ok_ref.txt

Reading output ref from btle_rx_test_output_ref.txt

2 read finish from btle_rx_test_output_ref.txt

ACCESS_ADDRESS 11850a1b detected

payload_length 0 octet

best_phase idx (among 8 samples) 4

crc_ok 1

656 NUM_SAMPLE_INPUT

1456 sample_in_count

Save output to btle_rx_test_output.txt

crc_ok flag verilog 1 python 1

Test PASS.

Compare the btle_rx_test_output_mem and the btle_rx_test_output_ref_mem ...

0 difference found

btle_rx_tb.v:232: $finish called at 182406250 (1ps)

Run the gfsk_demodulation (part of the btle_rx receiver) testbench:

iverilog -o gfsk_demodulation_tb gfsk_demodulation_tb.v gfsk_demodulation.v

vvp gfsk_demodulation_tb

Outputs:

VCD info: dumpfile gfsk_demodulation_tb.vcd opened for output.

Reading input I from btle_rx_gfsk_demodulation_test_input_i.txt

82 read finish for test input I.

Reading input Q from btle_rx_gfsk_demodulation_test_input_q.txt

82 read finish for test input Q.

Reading output ref from btle_rx_gfsk_demodulation_test_output_signal_for_decision_ref.txt

81 read finish from btle_rx_gfsk_demodulation_test_output_signal_for_decision_ref.txt

Reading output ref from btle_rx_gfsk_demodulation_test_output_bit_ref.txt

81 read finish from btle_rx_gfsk_demodulation_test_output_bit_ref.txt

82 input

82 bit output

82 signal for decision output

Save output bit to btle_rx_gfsk_demodulation_test_output_bit.txt

Save output signal for decision to btle_rx_gfsk_demodulation_test_output_signal_for_decision.txt

Compare the gfsk_demodulation_test_output_bit_mem and the gfsk_demodulation_test_output_bit_ref_mem ...

0 error found! output bit Test PASS.

Compare the gfsk_demodulation_test_output_signal_for_decision_mem and the gfsk_demodulation_test_output_signal_for_decision_ref_mem ...

0 error found! output signal for decision Test PASS.

gfsk_demodulation_tb.v:199: $finish called at 112406250 (1ps)

Run the access address searching (part of the btle_rx receiver) testbench:

iverilog -o search_unique_bit_sequence_tb search_unique_bit_sequence_tb.v search_unique_bit_sequence.v

vvp search_unique_bit_sequence_tb

Outputs:

VCD info: dumpfile search_unique_bit_sequence_tb.vcd opened for output.

WARNING: search_unique_bit_sequence_tb.v:43: $readmemh(btle_config.txt): Not enough words in the file for the requested range [0:31].

ACCESS_ADDRESS 11850a1b

Reading input from btle_rx_search_unique_bit_sequence_test_input.txt

81 read finish from btle_rx_search_unique_bit_sequence_test_input.txt

Reading output ref from btle_rx_search_unique_bit_sequence_test_output_ref.txt

1 read finish from btle_rx_search_unique_bit_sequence_test_output_ref.txt

unique_bit_sequence full match at the 41th bit

unique_bit_sequence starting idx 9

Compare the unique_bit_sequence starting idx and the search_unique_bit_sequence_test_output_ref_mem[0] ...

Same as python result 9. Test PASS.

81 input

search_unique_bit_sequence_tb.v:122: $finish called at 111406250 (1ps)

Above Verilog testbench shows that the access address is found at index 9 in the incoming IQ sample stream, and it is equal to the Python script test vector/result. Because the beginning 8 bits are preamble, the access address starts from the 9th bit. We can add 1 sample delay (8 in the oversampling domain) in the channel emulation part of the Python script, and run this access address search testbench again:

cd ../python/

python test_vector_for_btle_verilog.py 2 20 0 8

cd ../verilog/

iverilog -o search_unique_bit_sequence_tb search_unique_bit_sequence_tb.v search_unique_bit_sequence.v

vvp search_unique_bit_sequence_tb

Outputs:

VCD info: dumpfile search_unique_bit_sequence_tb.vcd opened for output.

WARNING: search_unique_bit_sequence_tb.v:43: $readmemh(btle_config.txt): Not enough words in the file for the requested range [0:31].

ACCESS_ADDRESS 11850a1b

Reading input from btle_rx_search_unique_bit_sequence_test_input.txt

82 read finish from btle_rx_search_unique_bit_sequence_test_input.txt

Reading output ref from btle_rx_search_unique_bit_sequence_test_output_ref.txt

1 read finish from btle_rx_search_unique_bit_sequence_test_output_ref.txt

unique_bit_sequence full match at the 42th bit

unique_bit_sequence starting idx 10

Compare the unique_bit_sequence starting idx and the search_unique_bit_sequence_test_output_ref_mem[0] ...

Same as python result 10. Test PASS.

82 input

search_unique_bit_sequence_tb.v:122: $finish called at 112406250 (1ps)

As you can see, the Verilog testbench shows that the access address starts from the index 10 instead of 9 in the previous case, after we add artificial 1 sample delay in the channel.

The argument 2 20 0 8 to test_vector_for_btle_verilog.py means that: example index 2; 20dB SNR; 0 PPM; 8 oversample delay (8 oversample = 1 sample)
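The access address search itself is a sliding comparison over the demodulated bit stream. The sketch below shows the idea with a hypothetical `find_bit_sequence` helper; it returns a 0-based index, so its numbering convention may differ from the index printed by the testbench, and it is not the project's search_unique_bit_sequence implementation.

```python
def find_bit_sequence(bits, pattern):
    """Return the 0-based start index of the first full match, or -1."""
    bits = list(bits)
    pattern = list(pattern)
    n = len(pattern)
    for start in range(len(bits) - n + 1):
        if bits[start:start + n] == pattern:
            return start
    return -1


if __name__ == "__main__":
    preamble = [0, 1, 0, 1, 0, 1, 0, 1]      # illustrative 8-bit preamble
    address = [1, 1, 0, 1, 1, 0, 0, 0]       # illustrative pattern, not a real access address
    stream = preamble + address + [0, 0, 1]
    print(find_bit_sequence(stream, address))        # match right after the preamble
    print(find_bit_sequence([0] + stream, address))  # one extra bit of delay shifts the match by one
```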

Synthesis and Implementation for Xilinx FPGA

Before going to OpenLane2 in the next section, we first map the design to a Xilinx FPGA using Xilinx Vivado 2021.1. No paid license is needed because the chosen FPGA model is below the device size that requires one. The model is xc7z020, which is widely used on FPGA dev boards such as ZedBoard and PYNQ.

Method to run the Xilinx FPGA project:

- Open Vivado 2021.1, and go to the BTLE/verilog directory by “cd” command in the Tcl Console.

- Run source ./btle_controller.tcl in the Tcl Console to create the project for target FPGA xc7z020 with 16MHz constraints in the btle_controller_wrapper.xdc

- Click Generate Bitstream on the left bottom corner to run the FPGA implementation and generate FPGA bitstream.

With the default Xilinx Vivado strategy, the design fits in the FPGA easily without much optimization efforts on the clock speed and area. The main information in the Vivado report:

Utilization:

| Cell | Used | Total in FPGA | Percentage |

|---|---|---|---|

| Slice LUTs | 3563 | 53200 | 6.7% |

| Slice Registers | 2307 | 106400 | 2.1% |

| F7 Muxes | 75 | 26600 | 0.28% |

| F8 Muxes | 16 | 13300 | 0.12% |

| Slice | 1180 | 13300 | 8.87% |

| LUT as Logic | 2687 | 53200 | 5% |

| LUT as Memory | 876 | 17400 | 5% |

| DSPs | 48 | 220 | 21.8% |

Timing:

| Worst Negative Slack (WNS) | Total Negative Slack (TNS) | Number of Failing Endpoints |

|---|---|---|

| 27.701 ns | 0 ns | 0 |
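As a rough reading of the timing table, the positive slack can be turned into an estimated maximum clock frequency. This is a back-of-the-envelope sketch that assumes the 16MHz constraint mentioned above is the only clock of interest and that the critical path equals the clock period minus the slack; `estimated_fmax_mhz` is a hypothetical helper, not a Vivado report value.

```python
def estimated_fmax_mhz(clock_mhz, wns_ns):
    """Estimate the max clock frequency from the constraint and the slack."""
    period_ns = 1e3 / clock_mhz            # 16 MHz -> 62.5 ns
    critical_path_ns = period_ns - wns_ns  # time actually used by the slowest path
    return 1e3 / critical_path_ns


if __name__ == "__main__":
    print(round(estimated_fmax_mhz(16.0, 27.701), 2), "MHz")
```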

Power:

| Total On-Chip Power | Junction Temperature | Thermal Margin | Effective theta JA |

|---|---|---|---|

| 0.12W | 26.4 C(Celsius) | 58.6C (4.9W) | 11.5 C/W |

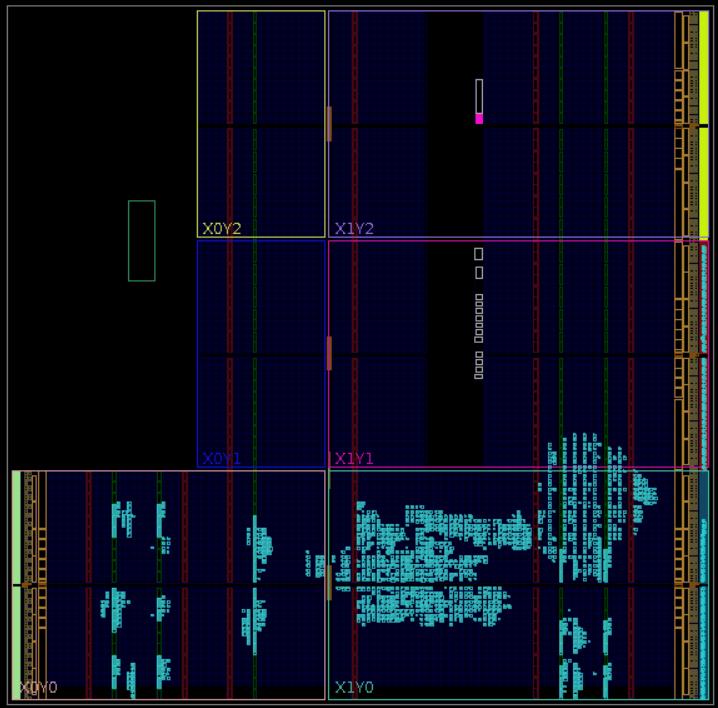

The final floorplanning&routing result:

Run through OpenLane2 SKY130 PDK flow to generate GDSII

To run through OpenLane workflow, please upload and run open_btle_baseband_chip.ipynb in google colab. The flow is mainly learnt/copied from this reference colab file:

https://colab.research.google.com/github/efabless/openlane2/blob/main/notebook.ipynb

For local setup, please follow https://openlane.readthedocs.io/en/latest/getting_started/index.html

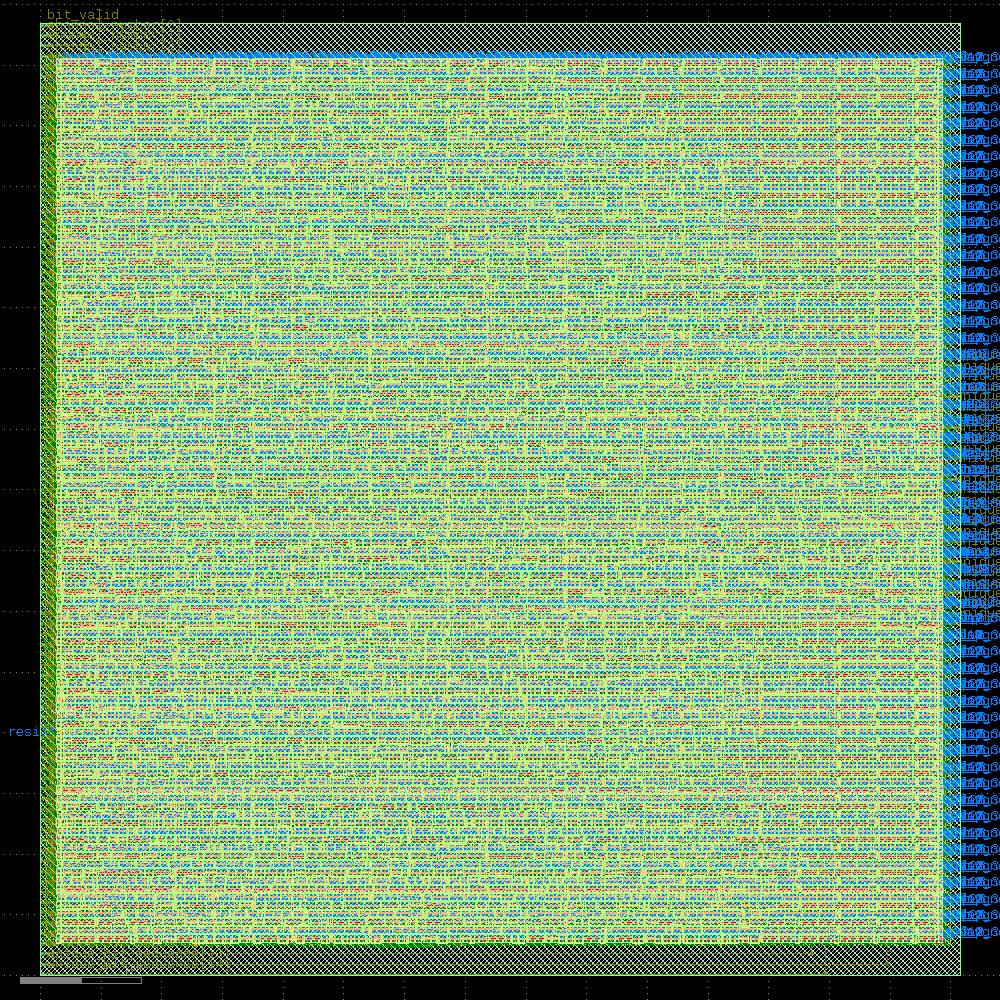

- The GDSII of the btle rx core

Note: Static Timing Analysis (Post-PnR) failed

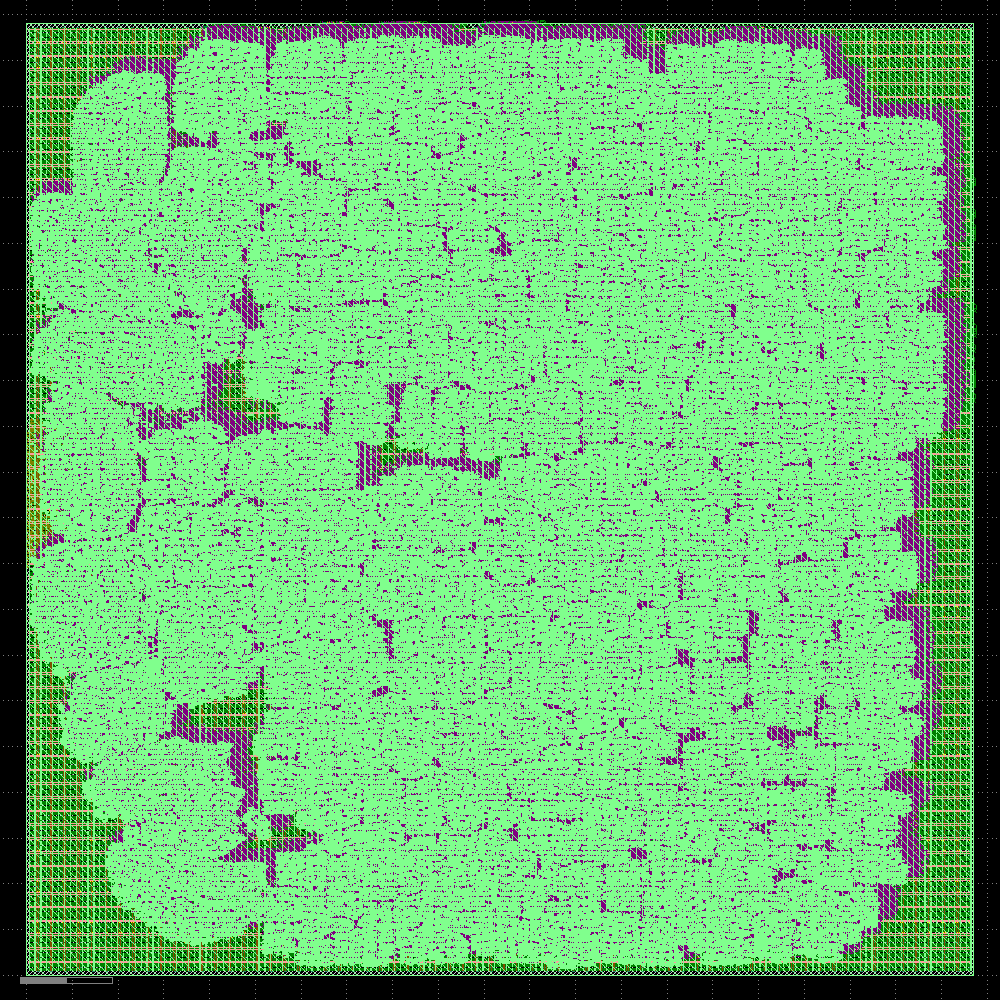

- The Detailed Placement and CTS of the whole project

Note: Global Routing failed

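Today I sold my car for 10,413 euros. Over 7 years I drove it 145,520 km, and it depreciated by 15,587 euros.

- Bought in 2016 from a BMW used-car dealer for 26,000 euros. The car was first registered in 2015 and had 21,000 km on it at the time.

- Sold in 2023 with 166,520 km on it to wijkopenautos.be for 10,413 euros, a depreciation of 15,587 euros.

The selling process was very smooth:

Enter the vehicle information online and get an estimated price. Then go to an on-site inspection; once everything checks out, they give the final offer. My final offer was exactly the same as the online estimate, because the information I entered online was fully accurate and the car really was in good condition. The contract is signed on the spot, and the payment arrives within 2 to 3 working days. The dealer removed the license plates for me to take away.

I also asked the BMW dealer where I had bought the car, as well as other local used-car buyers. They were all slow and only offered 9,000 to 10,000 euros. Quite a few places refused the car outright once they heard it was a diesel. The government is now pushing hard for new-energy vehicles; at the very least it has to be a gasoline car, and after 2030 no combustion-engine cars will be sold any more. Diesel cars will be banned from city centers/green zones after 2024 (or is it 2027?). You can still enter if you want to: just pay.

The counterparty on my contract with wijkopenautos.be is a company in Amsterdam (though headquartered in Germany). The Dutch really know how to do business! And the Germans really know cars!

After-sale steps:

- Hand the license plates to the post office, which deregisters them with the government (otherwise the annual vehicle tax keeps coming). They did not even charge me...? (Will there be a bill?)

- Take the post office receipt to the insurance company to cancel the insurance.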

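This article uses RF hardware as the thread to survey common SDR (software-defined radio) solutions. SDR hardware sits between the antenna and the digital signal processing; it digitizes the radio signal and hands it to a host or an embedded system (FPGA, DSP, MCU) for processing. SDR hardware generally consists of an RF part and a digital part.

RF here is meant in the broad sense: everything from the antenna to the ADC/DAC, including the high-frequency/RF stage, the intermediate frequency (IF, if any), zero-IF, analog baseband, the associated filtering, and the mixed-signal ADC/DAC. The digital part is not used as the thread because its implementation comes down to one of the following four options:

- FPGA based (can also connect to a host). Typical example: the USRP series

- Xilinx Zynq SoC FPGA based. Typical example: Xilinx dev boards paired with Analog Devices RF boards

- USB microcontroller/MCU based. Typical examples: HackRF+portapack (NXP LPC4320), Malahit (STM32H7)

- Host or SBC (single-board computer) based. Typical examples: rtl-sdr TV dongles, SDRPlay/RSP1, AIRSPY, RX888; KiwiSDR (using a BBB BeagleBone as the host), CaribouLite RPi HAT (using a Raspberry Pi as the host)

Clearly, the digital part is relatively mature and highly standardized, thanks to the advanced state of chip process technology and the computer industry.

The RF part, by contrast, includes high-frequency and analog signal processing plus mixed-signal ADC/DAC circuits. It is structurally complex, benefits less from advances in digital process nodes, and often becomes the deciding factor in the performance of SDR hardware. This article therefore takes the RF solution as its main thread and introduces the following SDR RF solutions:

- PWM RF: RF without RF

- AD9361, AD9371, ADRV series

- LMS6002D, LMS7002M, LimeSDR series

- AT86RF215 (Microchip's Zigbee IoT chip with I/Q sampling support)

- RFFC5072+MAX2837+MAX5864 (HackRF's frequency converter plus the Maxim WiFi chipset)

- E4000/R820T+RTL2832, the rtl-sdr TV dongle series

- E4000+TLV320ADC3140 audio codec (Funcube Dongle)

- R820T+LPC4370 (AIRSPY)

- MSI001+MSI2500 (SDRplay/RSP1)

- MSI001+NAU8822 audio codec+STM32H7 (the Russian Malahit receiver)

- ADL5350+ADF4350 2.4GHz frequency conversion for rtl-sdr

- Direct RF sampling (RFSoC, KiwiSDR, RX888, RED PITAYA)

- Traditional amateur radio RF (uSDX)

On to the main text: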

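PWM RF: RF without RF

Yes, you read that right: “the highest level of swordsmanship is to have no sword in hand.” As the I/O speed of digital chips (FPGAs, Raspberry Pi, microcontrollers) keeps rising, an RF signal can be transmitted just by driving an I/O pin with PWM (pulse-width modulation). By a similar principle, a high-speed I/O pin can be used as a high-speed 1-bit ADC to recover an RF signal directly.

For an early example, search for the 2007 paper “An FPGA Based All-Digital Transmitter with Radio Frequency Output for Software Defined Radio”. It used the MGT (multi-gigabit transceiver) high-speed pins on a Xilinx Virtex2pro FPGA to transmit a 64QAM signal with an 800MHz carrier and 20MHz bandwidth. The adjacent channel leakage ratio (ACLR) is 45dB and the EVM is better than 1%.

FPGAs are now much faster than in 2007. Search for the 2017 paper “Real-time all-digital radio-over-fiber LTE transmission”, which used 27.5Gbps digital I/O on an FPGA together with PWM modulation to generate a 5MHz LTE signal on a 3.44GHz carrier.

By a similar principle, a high-speed FPGA I/O pin can also be treated as a high-speed 1-bit ADC; combined with the FPGA's high-speed PWM output and a comparator, it can implement a high-speed RF ADC. Search for the 2021 paper “All-digital FPGA receiver: on Intel Stratix 10 TX”.

Is all of that too high-end? Don't worry. With just a very cheap Lattice MachXO2 FPGA plus three resistors and one capacitor, you too can listen to the radio at home with an FPGA wired directly to an antenna! Search for “FPGA + 3 R + 1 C = MW and SW SDR Receiver”. This is what the receiver looks like:

Based on a similar principle, people have also developed programs that transmit RF signals from the Raspberry Pi's I/O pins. To transmit FM broadcast, search for “Turn Raspberry Pi into FM transmitter”. To transmit facsimile-style images, search for “Emitting Hellschreiber from a Raspberry Pi GPIO: combining gr-hellschreiber with gr-rpitx”.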

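AD9361, AD9371, ADRV series

Analog Devices is an old friend of ours. Its AD9361, AD9371 and ADRV families of highly integrated RF transceivers are widely used in high-end instruments, software-defined radios and commercial wireless products. They offer continuous coverage below 6GHz, bandwidths from tens of MHz up to over a hundred MHz, excellent RF performance, and flexible configuration.

Besides high-end commercial use, they are also very popular among SDR DIY enthusiasts. After all, a single chip covers every processing stage needed between the antenna and the ADC/DAC, and the performance is beyond question. You only need to connect an antenna and a baseband to build an SDR device this powerful at home, which was hard to imagine in the past.

Our openwifi (an open-source WiFi chip design) can currently only run on FPGA + AD9361 platforms. This RF chip really does it all, with excellent and well-rounded performance.

A non-exhaustive list of SDR products based on Analog Devices highly integrated RF transceivers:

- USRP B series

- USRP E series

- USRP N3xx series

- Analog Devices FMCOMMS series RF boards

- Analog Devices SoM modules ADRV9361-Z7035, ADRV9364-Z7020

- PlutoSDR

- BladeRF second generation

- EPIQ Sidekiq series

- Chinese-made gridrf NH7020

- Chinese-made MicroPhase (微相) series

- Chinese-made SDRPI

- Chinese-made Pluto plus

- Chinese-made Pluto Zynq7020 enhanced edition (LibreSDR)

- Chinese-made PYNQSDR PYNQ-Z1 + AD936X SDR

- Chinese-made Neptune SDR (Bilibili user 薛定谔的猫power)

- Chinese-made AD9361-like chips

- And so on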

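LMS6002D, LMS7002M, LimeSDR series

Lime Microsystems is a British company, and its LMS series competes with Analog Devices' AD93xx chips. The well-known LimeSDR devices are based on the LMS7002M. The first-generation BladeRF was based on the LMS6002D (the second generation switched to the AD9361).

The LMS RF chips are almost the only AD9361 competitor that can be bought on the open market, so their existence matters a great deal. The AD9361 family is only a small part of Analog Devices' vast product line and revenue, whereas the LMS RF chips are everything Lime Microsystems has.

According to the Lime Microsystems website, the LMS8001+ frequency converter already supports up to 12GHz and 120MHz bandwidth, and the LMS9000 will support up to 100GHz and >2GHz bandwidth.

Here's hoping Lime Microsystems keeps getting better!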

AT86RF215(Microchip公司支持I/Q采样的Zigbee IoT芯片)

这是Microchip公司(就是出ATMEL单片机那家)的一款IoT芯片,支持sub 1GHz和2.4GHz的Zigbee标准,FSK、OFDM、O-QPSK。

它之所以出现在SDR射频方案里,是因为它在标准协议处理之外,也提供了ADC/DAC的IQ采样接口,因此也可以作为通用SDR射频芯片。支持射频带宽2.5MHz和采样率4MHz。

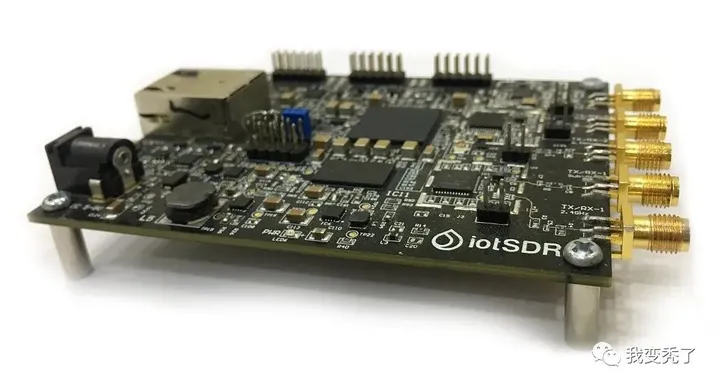

iotSDR采用了AT86RF215 + Zynq 7010的方案,可以看做是把PlutoSDR里的AD9363替换为了更低端的面向IoT的射频前端。

CaribouLite RPi HAT则是把AT86RF215做成了树莓派扩展板的思路,可以直接插在树莓派的IO插座上,构成完整系统。

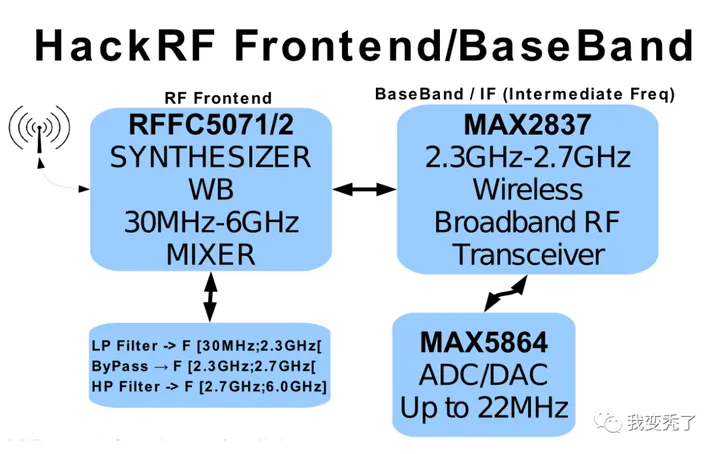

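RFFC5072+MAX2837+MAX5864 (HackRF's frequency converter plus the Maxim WiFi chipset)

This is HackRF's RF solution. It is essentially a WiFi RF solution from Maxim (since acquired by Analog Devices) with a Qorvo RFFC5072 mixer in front. The mixer converts the target frequency, anywhere within 6GHz, to 2.4GHz, where the mature 2.4GHz WiFi RF chipset (MAX2837+MAX5864) takes over.

You can also search the Maxim Integrated website for similar WiFi RF chipsets. What surprised me is that such a chipset, with the RF and the ADC/DAC split into separate chips, has specs that are not high (weak, one might say) compared with many of today's WiFi chips and takes more board area, yet it is still in production, which is very considerate of them. But who are the main customers?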

E4000/R820T+RTL2832、rtl-sdr电视棒系列

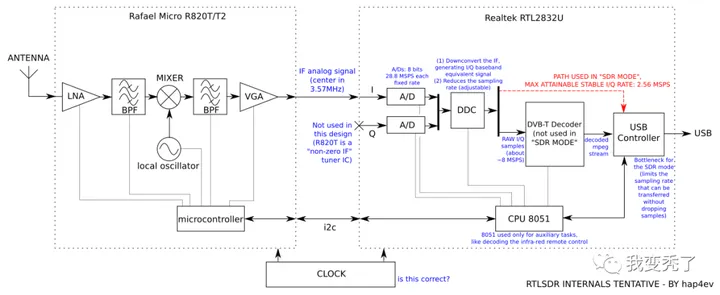

rtl-sdr即大名鼎鼎的电视棒。频率覆盖到1.7GHz左右,采样率和带宽最大约3.2MHz。电视棒由调谐器芯片(E4000或R820T,频率范围略有不同)和带有ADC、DVB-T解码器、USB的数字芯片RTL2832U构成(含8051单片机)。调谐器芯片又叫tuner。用过古老电视机的都知道,就是你拧动一个旋钮,就把电视调谐到不同的频率上,调谐器tuner的名字由此而来。

对于软件无线电应用来说,数字芯片里的DVB-T解码器是多余的。这里3.2MHz的带宽/采样率主要受制于RTL2832U数字芯片,毕竟它不是为串流I/Q采样到主机这种SDR应用而设计,怀疑是因为固件上的USB性能有一定瓶颈或内部数字中频架构限制。调谐器tuner芯片R820T并不是带宽限制因素,因为通常一个电视频道为8MHz,为了看电视必须有接收8MHz带宽信号的能力。

由于电视棒极其低廉的价格,群众基础十分庞大,资料也非常多,这里不再用过多篇幅介绍。主要介绍下面几种基于电视棒方案/芯片的各种SDR衍生设备。

E4000+TLV320ADC3140音频codec(Funcube Dongle)

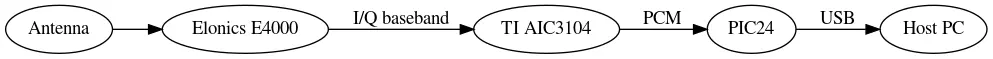

既然常规电视棒里的DVB-T解码器在软件无线电里并不需要,那么可不可以只用那颗调谐芯片配合更简单的数字芯片来打造一款专门的SDR dongle呢?

Funcube Dongle就是采用这种设计。它使用TI的一颗双声道音频codec充当E4000输出的零中频基带I/Q信号的ADC,然后用Microchip公司的PIC24F USB单片机连接到主机。受限于音频codec ADC采样率,它的最大射频带宽约80KHz。

可能由于E4000这个tuner芯片太老了,据说新版的FUNcube Dongle Pro+已经换用MSI001 tuner芯片。参见后面的“MSI001+MSI2500”章节。

R820T+LPC4370(AIRSPY)

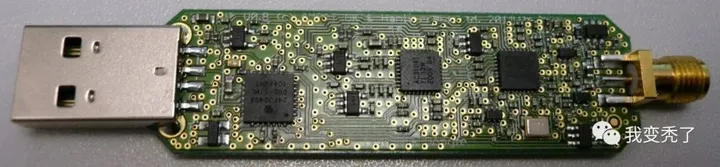

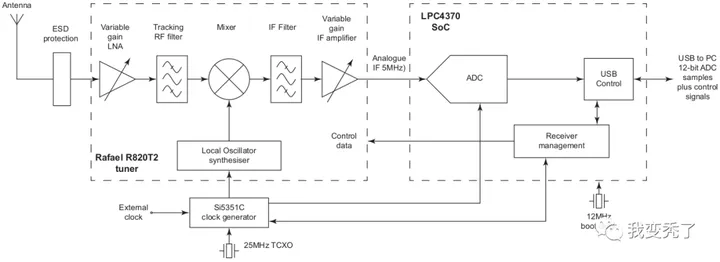

既然rtl-sdr电视棒的采样率/带宽主要受限于数字部分的RTL2832U,而不是调谐器tuner E4000/R820T,那么将数字部分替换为专门的ADC和USB芯片不就完全解锁了tuner的能力?的确,AIRSPY这个SDR设备就是这么做的。

可以看到,它在tuner之后直接接了一个带有80Msps高速ADC的NXP的单片机LPC4370,因此它直接可以支持到大约10MHz带宽(这时就受限于tuner芯片了,单片机80Msps采样率已完全超出tuner芯片带宽范围)。

MSI001+MSI2500(SDRplay/RSP1)

这是另一种电视棒套片。MSI001是tuner芯片,MSI2500是含ADC、USB等的数字芯片。神奇的是,这个MSI2500数字芯片并不包含电视解调解码器!(还记得rtl-sdr里的数字芯片RTL2832U里是包含了我们并不需要的电视解码器么?)

在MSI的电视棒方案里,电视信号解调解码完全是在主机端用软件做的,也就是这个电视棒本身就是个纯SDR方案。这也意味着这套SDR方案可提供的带宽必然超过8MHz,因为要在主机端软解码电视信号,必然意味着需要将一个电视频道的8MHz带宽I/Q全部实时采集到主机供软解码程序使用。实际上它最大可提供大约10MHz的带宽能力,频率覆盖到约2GHz。

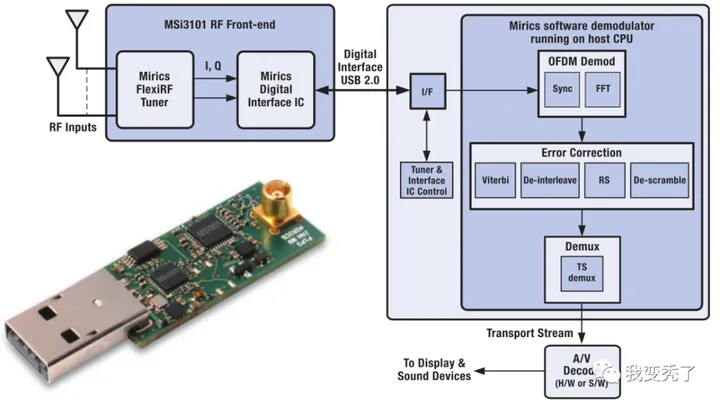

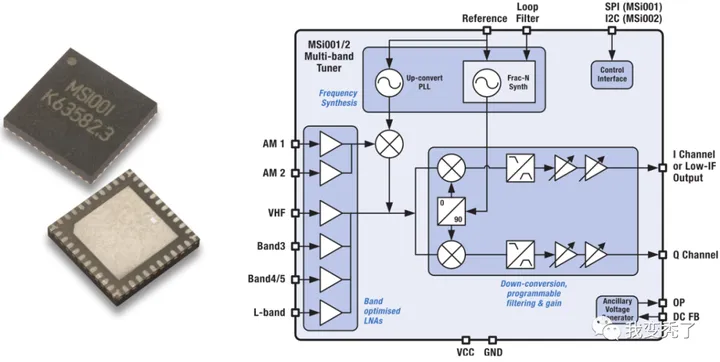

上图中,USB2.0接口左侧就是MSI001+MSI2500构成的电视棒,右侧就是主机侧的电视信号解调解码软件部分。MSI001 tuner内部结构如下。

由于这个方案本身就是SDR的,不难想象用它来DIY SDR设备是多么方便,SDRplay/RSP1及其众多的变种(包括众多国产版)就是基于这个方案。

这家Mirics Semiconductor公司貌似有点神秘,如果你访问他们的网站Mirics,只会得到以下信息:We are sorry but we do not offer direct support to end users. Please address any queries you may have to the supplier of your hardware。

从有限的互联网信息来看,这又是一家英国公司,创始人曾经在LSI Corporation 和 Analog Devices 工作。

MSI001+NAU8822音频codec+STM32H7 (俄罗斯 Malahit 孔雀石收音机)

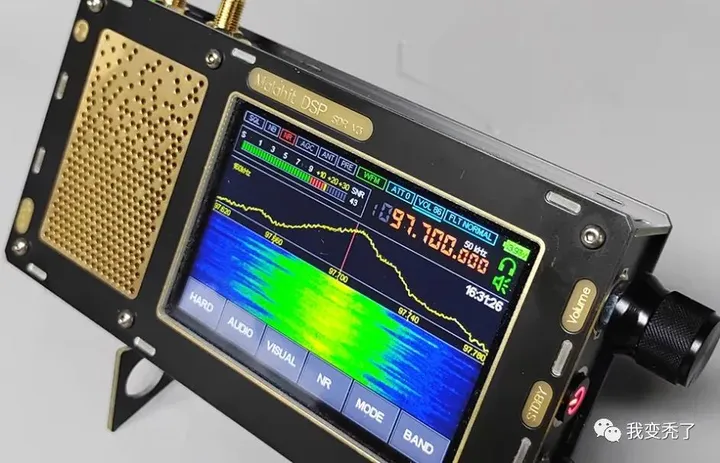

著名的来自俄罗斯的Malahit孔雀石收音机也是基于上述MSI方案,但它只采用MSI001作为射频前端,其输出的I和Q两路信号(每一路均为差分)连接至音频codec NAU8822,然后连接至主控:性能强劲的主频480MHz的STM32H7单片机。这种SDR完全摆脱对主机的依赖,大屏幕加解调以及声音播放全在掌中完成。以下是国产版孔雀石收音机:

ADL5350+ADF4350 rtl-sdr 2.4GHz变频方案

各种电视棒方案价格真香,美中不足的是频率覆盖一般都在2GHz以下,那么如何才能玩起更高频段,例如2.4GHz ISM频段,且仍可利用电视棒呢?那就是各种变频方案了。也就是通过混频器将2.4GHz(或者更高频信号)变频到电视棒覆盖的频率范围内,之后交由电视棒。

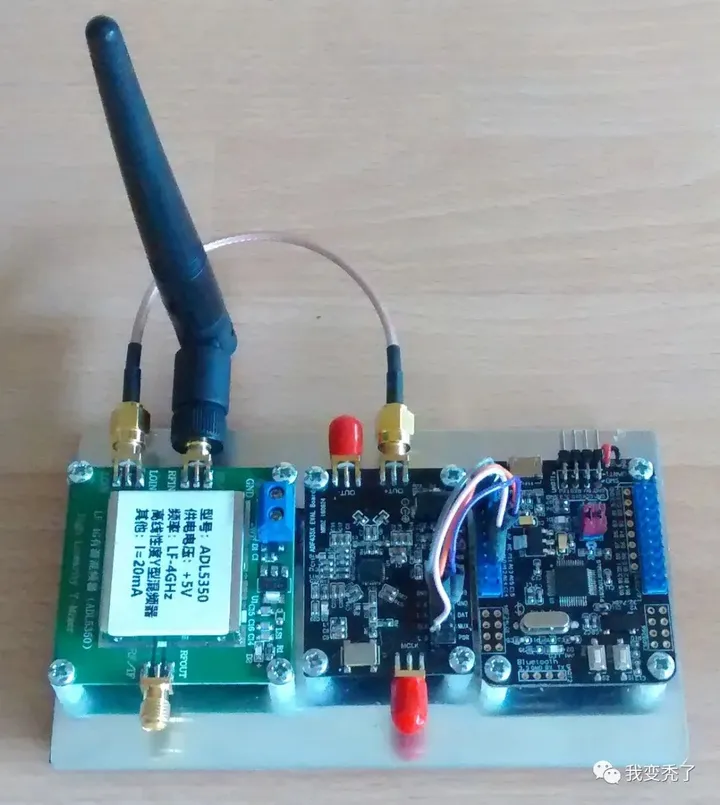

自行搜索“A LOW COST 2.4 GHZ DOWNCONVERTER FROM OFF THE SHELF DEV BOARDS”,你可以找到这个基于现成Analog Devices评估板的ADL5350+ADF4350 变频方案。ADL5350为混频器,ADF4350为本振,此外还需要一个STM32单片机来对这两颗芯片进行控制,最终完成完整的2.4GHz到1GHz的下变频功能。

射频直采(RFSoC、KiwiSDR、RX888、RED PITAYA)

射频直采可以说是SDR的“圣杯”了。概念上,只要ADC/DAC采样率够高,可以直接接上天线收发空中的信号。对于地面和卫星通信中常用的几GHz甚至几十GHz的信号,射频直采需要非常高速的ADC/DAC,这属于是“禁运”级别的高科技了。请自行搜索Xilinx RFSoC开发板以及USRP X410设备。这些设备采用基于Xilinx RFSoC直采架构FPGA,可以覆盖到6~8GHz载波频率,并提供400MHz的带宽,壕无人性!据说Intel/Altera正在憋大招,会推出一下子能够直采毫米波的射频FPGA!

但对于中波、短波、调频广播以及业余无线电玩家常用的几十MHz频率的信号来说,用ADC直采已经是“飞入寻常百姓家”了。这其中知名设备有KiwiSDR,RX888,RED PITAYA。

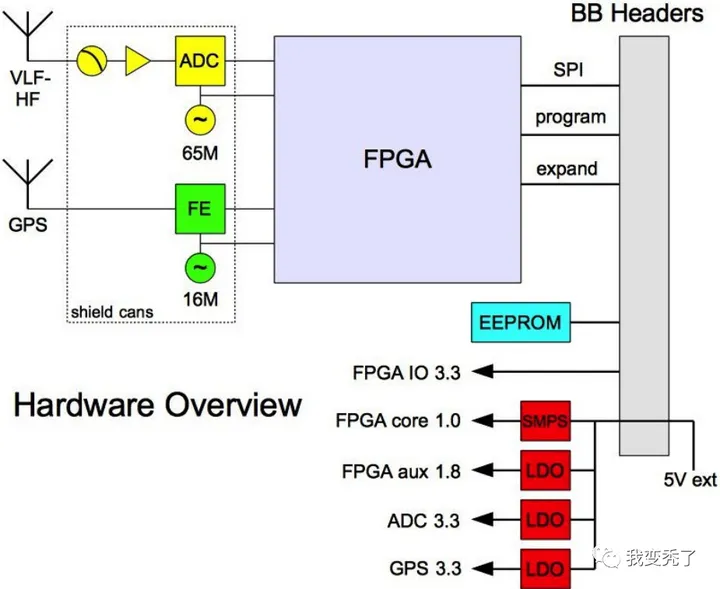

KiwiSDR直接用了一个65Msps的LTC2248 14-bit ADC来采集30MHz以下的HF信号,并通过FPGA连接到BBB(BeagleBone)单板计算机上。主要用途还是窄带业余无线电。

RX888 16BIT ADC SDR Receiver。

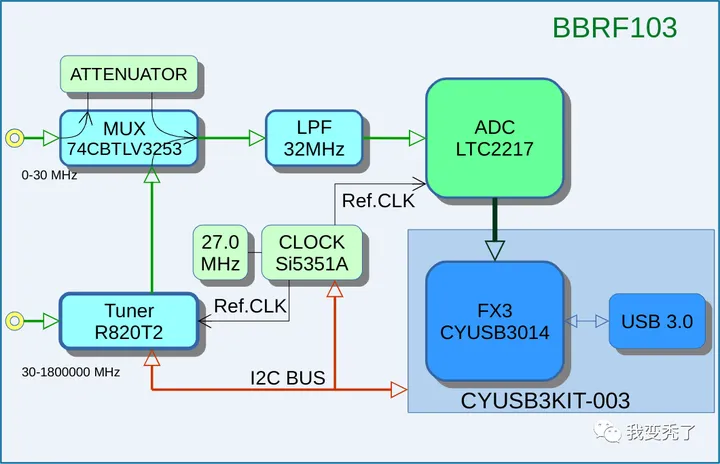

看照片应该是出自国人之手。但据说原始设计是基于BBRF103。

在BBRF103的设计中,使用LTC2217 105Msps 16-bit A/D来直采30MHz以下信号,30MHz以上信号仍旧采用电视棒的R820T来下变频至30MHz以下,以供ADC采集。

RED PITAYA主要用途是测量仪器,但由于它提供了高速ADC串流采样到主机的功能,也被很多人用来直采较低频率的射频信号构成SDR接收机。

此外,某些不知出于何种目的的单片机竟然自带了80M采样率的ADC,理论上可直接收听FM广播以及频段更低的所有广播和业余无线电信号(前提是信号或者天线够强。。。)。对,NXP LPC4370说的就是你!相关单片机直接听广播的方法请大家自行搜索“LPC4370 SDR receiver”。当然它也很适合直接作为软件无线电的中频或者基带数字部分,比如前面提到的AIRSPY。

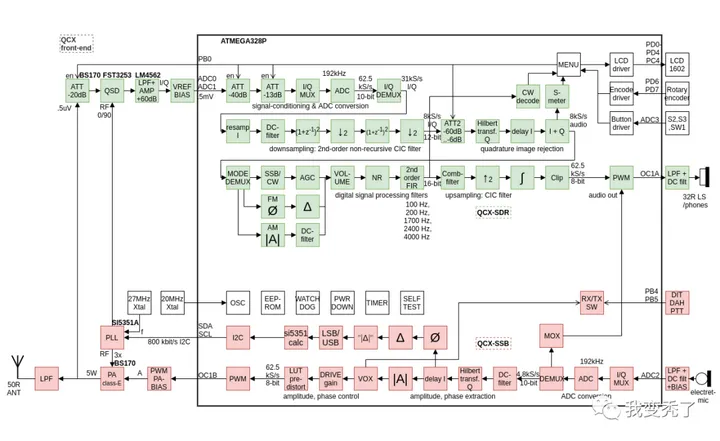

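Traditional amateur radio RF (uSDX)

RF and purely analog demodulation are the traditional craft of amateur radio. Once computers arrived, enthusiasts began using a 12KHz low IF in superheterodyne receivers and feeding it into a PC sound card to do SDR. Now that powerful microcontrollers with ADCs are available, the PC is no longer needed, because modulation, demodulation and audio can all be handled inside the microcontroller.

To generate the low IF, you can use a traditional analog mixer or a so-called switching mixer (similar to a class-D amplifier?), also known as a Quadrature Sampling Detector. It converts the RF signal directly to the low IF through a high-speed switch (similar to the concept of narrowband undersampling). The FST3253 high-speed switch (QSD) in the figure below performs the downconversion, followed by low-pass filtering and amplification before the ADC. This is the recently popular “uSDX: micro Software Defined Transceiver”.

That roughly sums up all the SDR solutions you can see today. Did I miss any? Feel free to add them in the comments!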

Introduction of MSV-ATC Technology in Mobile Satellite Systems

JIAO Xianjun, CAO Guixing

(China Academy of Space Technology, Beijing 100094, China)

Abstract: This paper introduces Ancillary Terrestrial Component (ATC), the latest technology in mobile satellite communications and a powerful candidate for future mobile satellite systems (MSS). ATC is developed mainly by the US company Mobile Satellite Ventures (MSV). It is a collection of new technologies for MSS and represents the latest direction of their development. An MSS that adopts ATC can serve subscribers in dense urban and indoor environments, making truly seamless, ubiquitous coverage a reality. The paper studies three aspects of ATC: development history, system principle and key technologies.

Key words: MSS; ATC; new development

Premature optimization is the root of all evil.

]]>站在21世纪后4/5开始之际,回望前段时间发布的openwifi项目(https://github.com/open-sdr/openwifi),对个人而言,再一次提升了自己的能力边界。对社区而言,我想说这是中国开发者给Wi-Fi研究领域的一点点基础性贡献。

正文:

Wi-Fi已经诞生20多年了。如今,Wi-Fi就像空气和水,视而不见却如影随形。一方面它是连接互联网的”生活必需品”,另一方面,因为十分低廉的硬件价格和无线电信号的开放性,也成为黑客们最喜欢的研究对象之一。

这里的黑客也包括各大学和研究机构的研究员们。他们的利用Wi-Fi开展了各种”脑洞”研究。比如从Wi-Fi信号里窃取或保护你的隐私,2015年央视315晚会上现场从Wi-Fi信号中获取的用户隐私展示仍历历在目。近几年,Wi-Fi信号甚至被用来探测物理世界里人的活动。MIT的研究人员利用无处不在的Wi-Fi信号实现了穿墙无源雷达,可以”看到”墙后面的人。其他”脑洞”研究还有比如利用Wi-Fi信号给低功耗物联网芯片供电等。这些有趣的研究工作大都是基于商用芯片或者软件无线电(SDR)。基于商用芯片主要采用开源驱动,反向驱动或固件的手段(比如github上的nexmon项目)。SDR在这些研究中一般“仅接收”或者“仅发射”,而不是”实时收发”。因为像商用Wi-Fi芯片那样实时收发对于SDR并不容易,原因后面会讲到。

说到软件无线电(SDR),在无线通信和安全研究领域它是一种重要的手段。SDR的基本思想是用软件实现无线通信硬件(比如芯片或基站)的功能。比如在移动通信领域,2G/3G时代的gsm监听以及伪基站,就是基于OsmocomBB发布的SDR硬件和软件。到了4G/5G时代,著名的SDR项目(LTE/5G软基站和UE)有:开源的srsLTE,OAI(Open Air Interface),以及非开源但性能逆天的Amarisoft(法国传奇黑客Fabrice Bellard出品)。

反观Wi-Fi领域,廉价/免费的且广为应用的开源设计却几乎没有。难道写出一个几块钱的Wi-Fi芯片功能的软件,比移动通信(2/3/4/5G)基带芯片更难?这种现象背后的原因涉及到Wi-Fi和移动通信频谱性质以及设计哲学的不同。

Wi-Fi工作在共享频谱(比如免费的2.4GHz和5GHz ISM频段)。在这个频谱中,不只Wi-Fi一种通信系统,蓝牙和Zigbee等也使用同一频谱,因此各国的无线电法规大都要求共享频谱中的设备采用Listen-Before-Talk (LBT)方式工作,即:发射前,先监听信道确保当前信道是空闲的,避免干扰到其他设备正在进行的传输。基于类似考虑,当一个设备发送完一个数据包之后最好能立刻从对方的反馈(ACK)中知道成功与否,这样可以缩短一次传输对信道的占用时间。因为,如果对方需要较长的时间才能给出反馈,那么其他设备则必须等待,此时信道白白浪费。如果其他设备不等待,那么这个关键的反馈信息可能被其他设备的传输所阻塞/干扰,其本身的不确定度加大,这是协议所不希望看到的,因为反馈信息对于协议是重要的状态信息。所以在尽可能短的时间内立即反馈成为一种简单有效的策略,它也可以避免芯片记录太长时间的状态/队列信息,从而减小片上RAM,降低成本。因此Wi-Fi协议规定如果成功接收到一个数据包(CRC校验通过),则需要在SIFS(Short Inter Frame Space)时间内发送给对方ACK包,这样对方就知道自己发送成功,可以发下一包了。如果对方在SIFS时间内没有接收到ACK包,则认为自己发送失败,对方会根据高层设置决定立刻重传(高层设置最大重传次数)或者放弃。SIFS在2.4GHz频段为10us,在5GHz频段为16us,在60GHz频段则更短。极短的SIFS时间保证了两个Wi-Fi设备每次通信对信道的占用是”连续”的(因为SIFS远小于包时间长度)。两个Wi-Fi设备的一次交互(数据包+ACK)未完成前,信道中的其他设备都会安静等待,但因为SIFS很短,这种等待造成的浪费并不严重。这种快速ACK只是Wi-Fi CSMA/CA MAC协议的一小部分,但对芯片来说也是收发时延要求最苛刻的部分。Wi-Fi标准中详细描述了CSMA/CA协议的各方面功能,确保信道共享公平且高效。这里不进一步展开。

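Unlike Wi-Fi, mobile communication uses expensive licensed spectrum and pursues the highest possible spectrum utilization. All transmission and reception by terminals is managed and controlled by the base station. Transmissions (including feedback such as ACKs) are scheduled in time by the base station. For example, the LTE HARQ (Hybrid Automatic Repeat reQuest) procedure specifies that after a 1 ms subframe of data is received, the feedback to the sender is scheduled 4 ms later. Compared with Wi-Fi's 10 us, this feedback delay is far longer. Of course the channel does not sit idle during these 4 ms: the base station schedules other terminals that need to send or receive new data. Scheduled use of the spectrum is generally more efficient than random contention, because there is no random waiting time for channel contention. The 4 ms feedback delay also leaves the receiver enough processing time.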

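Remember these two numbers, 10 us and 4 ms: they determine whether a wireless protocol can conveniently be implemented in PC software. The most popular SDR architecture is a PC plus an RF front end (USRP/BladeRF/HackRF, etc.). The two are mainly connected via PCIe, Ethernet or USB. At present, the communication latency between mainstream RF front ends (such as USRP) and the PC is at best on the order of several hundred us. For mobile communications, the PC's powerful processing capability can therefore be used to compute at leisure as the protocol requires, and the feedback can still be sent within the specified time (for example LTE's 4 ms feedback delay). For Wi-Fi's 10 us SIFS feedback delay, on the other hand, a PC software implementation becomes an almost impossible task. (I said "almost": it is not entirely impossible, so please read to the end.)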
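The argument can be written down as a tiny latency budget check. This is only a sketch under stated assumptions: the 300 us link latency is an illustrative stand-in for "several hundred us", the 1000 us processing time is made up, and counting the link latency twice (samples in, response out) is a modeling choice rather than a measured figure.

```python
def meets_deadline(deadline_us, link_latency_us, processing_us=0.0):
    """True if a response computed on the host can be on air in time.

    Assumption: samples cross the host link twice, once from the RF
    front end to the host and once back with the response.
    """
    return 2 * link_latency_us + processing_us <= deadline_us


HOST_LINK_LATENCY_US = 300   # assumption: "several hundred us" in the text
LTE_HARQ_DEADLINE_US = 4000  # 4 ms feedback delay quoted in the text
WIFI_SIFS_US = 10            # SIFS in the 2.4 GHz band quoted in the text

# LTE: even with 1 ms of (hypothetical) host processing there is slack left.
print(meets_deadline(LTE_HARQ_DEADLINE_US, HOST_LINK_LATENCY_US, 1000))

# Wi-Fi: the link latency alone already exceeds the SIFS budget.
print(meets_deadline(WIFI_SIFS_US, HOST_LINK_LATENCY_US))
```

Whatever the exact numbers are, the Wi-Fi case only works when the link latency drops to a few us, which is why the response has to be generated close to the RF front end.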

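Therefore, the sensible choice for implementing Wi-Fi with SDR is to connect an FPGA directly to the RF front-end chip. With modulation/demodulation and the CSMA/CA MAC layer both implemented inside the FPGA, a 10 us ACK feedback delay is achievable. This is also how commercial Wi-Fi chips are implemented, except that for cost and power reasons they do not use an FPGA but a custom-designed, taped-out ASIC.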

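In theory there is no problem implementing the complete Wi-Fi protocol on an FPGA-based SDR platform. The problem is that the number of skilled FPGA engineers in the world is probably less than one percent of the number of software engineers, and developing the same functional module may take tens of times longer on an FPGA than in software. So it is not that the Wi-Fi protocol is so complex, but that developing Wi-Fi on an FPGA takes far more time and effort than developing it in software.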

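The above covers only one key point of a Wi-Fi implementation, not all of it. To implement the full functionality of a Wi-Fi chip, implementing the OFDM modulation and demodulation defined by the Wi-Fi standard on an FPGA is far from enough. MAC functions (CSMA/CA), a driver and upper-layer protocol software (Association/Authentication/Encryption/etc.) are also needed.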

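Take Linux, which is widely used today (especially in embedded systems, routers and phones), as an example. https://wireless.wiki.kernel.org/ describes in detail how it supports Wi-Fi chips. A Wi-Fi chip vendor only needs to provide a driver as Linux requires (that is, a driver that implements the ieee80211_ops API predefined by the Linux kernel). On top of the driver, the Linux kernel provides high-level MAC support for Wi-Fi (mac80211, cfg80211), automatic link rate adaptation, the nl80211 interface between user space and kernel space, and a rich set of user-space tools such as wpa_supplicant for station mode and hostapd for AP mode. Relying on Linux's support for commercial Wi-Fi chips therefore removes a large part of the workload of an FPGA-based Wi-Fi implementation. Even so, an FPGA-based Wi-Fi implementation still involves a lot of work beyond modulation and demodulation. The main items are: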

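- Overcoming zero-IF transceiver self-interference such as local oscillator (LO) leakage (zero-IF is currently the popular RF front-end architecture). This matters because Wi-Fi transmits and receives on the same frequency, i.e. TDD half duplex, and it involves some design considerations for the digital IF in the FPGA.

- Obtaining the RF front end's AGC gain in real time and computing RSSI from the I/Q sample magnitudes, because the upper layers of Linux need RSSI reports. RSSI may need different calibration/compensation values for different frequencies and RF channels/antennas. RSSI is also an important basis for judging whether the channel is occupied, and is thus a foundation of the CSMA/CA protocol.

- Implementing the complete CSMA/CA protocol (the low MAC layer) on the FPGA. According to the Linux wireless design, the Wi-Fi MAC layer is split into a real-time low MAC (CSMA/CA operations such as SIFS, ACK, retransmission, CCA, NAV and backoff) and a non-real-time high MAC. The Linux mac80211 subsystem takes care of the high MAC, but the low MAC must be implemented in the FPGA, because Linux is not real-time enough to achieve precise delays at the us level. This architecture is the typical SoftMAC Wi-Fi chip. Another type of Wi-Fi chip is the FullMAC chip, in which the high MAC also resides in the chip rather than in Linux. Vendors of such chips do not have to rely on the high MAC implementation of the Linux mac80211 subsystem and have more freedom for performance optimization, though chip development of course needs more manpower and resources.

- The communication interface between the FPGA and Linux, for example the DMA interface for Wi-Fi packets and the FPGA register configuration and status interface. The Linux driver has to respond to the transmit/receive interrupts for Wi-Fi packets and access the FPGA registers.

- Management of the transmit queues inside the FPGA. After the Linux driver hands a packet to the FPGA, the FPGA has to wait for a suitable transmit opportunity (the TXOP in CSMA/CA), so the packet must first be buffered in a queue. This queue needs to be queried and manipulated by the Linux driver.

- Inserting and extracting the various pieces of information exchanged between the Linux driver and the FPGA (RSSI, timestamp, sequence number, etc.), because the upper layers of Linux need them. Some of this information travels with the packets, and some is exchanged through registers.

- Writing the Linux driver. The driver has to call the various functional interfaces of the FPGA and the RF front end in combination to implement the ieee80211_ops API predefined by the Linux mac80211 subsystem.

- Writing user-space tools based on nl80211 (for example sdrctl in openwifi). If you want to access or configure low-level driver/FPGA/RF functions in real time from user space, you need to communicate with the driver in the kernel through the nl80211 interface.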
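As an illustration of the RSSI item in the list above, here is a minimal sketch of how RSSI could be derived from I/Q samples and the current AGC gain, and then used for a simple energy-based busy/idle decision. It is not the openwifi implementation: the function names, the per-band calibration offset and the -62 dBm threshold are assumptions made for the example.

```python
import math


def rssi_dbm(iq_samples, agc_gain_db, cal_offset_db=0.0):
    """Estimate RSSI in dBm from complex baseband samples.

    The mean sample power is converted to dB, the AGC gain applied by
    the RF front end is removed, and a calibration offset (hypothetical,
    it would differ per frequency and antenna) maps the result to dBm.
    """
    power = sum(abs(s) ** 2 for s in iq_samples) / len(iq_samples)
    power = max(power, 1e-12)  # avoid log10(0) on an all-zero buffer
    return 10 * math.log10(power) - agc_gain_db + cal_offset_db


def channel_busy(rssi, threshold_dbm=-62.0):
    """Energy-detect style decision; the threshold is an assumed value."""
    return rssi >= threshold_dbm


# Example: unit-power samples, 30 dB of AGC gain, -20 dB calibration offset.
samples = [complex(1, 0)] * 64
level = rssi_dbm(samples, agc_gain_db=30.0, cal_offset_db=-20.0)
print(level, channel_busy(level))
```

In a real design this computation has to run in the FPGA at sample rate, since the busy/idle decision feeds the low MAC timing; the Python version only shows the arithmetic.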

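As the above shows, a seemingly simple Wi-Fi chip requires "full-stack" development. Inside a commercial company, a Wi-Fi implementation takes an engineering team working in close cooperation, and years to complete. In the research community, however, there are not many students, faculty or researchers with complete and rich engineering experience, and for most PhD students implementing complete Wi-Fi is a job with too low a return on investment. This is why complete Wi-Fi implementations are rare in research.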

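The goal of the openwifi project is to provide the research community with a complete Wi-Fi baseband chip/FPGA implementation, so that PhD students and researchers can skip the huge investment a Wi-Fi implementation requires and go straight to producing results. The first version of openwifi is currently based on the Xilinx Zynq FPGA (an SoC, System on Chip). This FPGA has an embedded ARM processor that can run Linux, which provides the mac80211 subsystem we need, and the development board can easily be connected to an Analog Devices RF front end (such as the AD9361). On this Xilinx plus Analog Devices SDR platform, RF, FPGA, ARM and Linux are all in place. Because it is based on an ARM processor, and the ARM and FPGA are in one chip (SoC), the openwifi design is also well suited to embedded applications (drone video links, Wi-Fi video conferencing, IoT, etc.).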

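It should be noted that although openwifi implements the "full stack", not every part was built from scratch. For example, the OFDM receiver module was modified, supplemented and integrated on top of the open-source openofdm project. Below is a review of the community's various attempts at Wi-Fi implementation over the years, and how openwifi is similar to and different from them.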

- WARP 802.11 design

https://warpproject.org/trac/wiki/802.11, https://mangocomm.com/802.11-mac-phy/

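This is an FPGA-based Wi-Fi reference design released by Rice University a long time ago. A license must be purchased to use it, at a cost of roughly 30k euros (depending on which parts you need), not including the cost of buying the board yourself (for example the ADRV9361-z7035). Judging from public information, it does not use the Linux mac80211 subsystem; instead, the high MAC and low MAC are implemented on MicroBlaze soft-core processors in the FPGA.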

- National Instruments Labview 802.11 framework

http://www.ni.com/product-documentation/53279/en/, http://www.ni.com/product-documentation/54094/en/

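An FPGA-based reference design from the big vendor NI. Besides the license fee for this design integrated into Labview (around 6k euros), you also need to buy a high-end USRP-2944 for around 9k euros to run it. Judging from public information, it does not use the Linux mac80211 subsystem either; it uses the NS3 network simulator on the PC as the high MAC and implements the low MAC in the FPGA. Because it is tied to Labview, the development environment has to be Windows.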

- Microsoft SORA and ziria

https://github.com/microsoft/Sora, https://github.com/dimitriv/Ziria

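A Wi-Fi implementation released by Microsoft Research Asia many years ago. The physical layer and the MAC are implemented entirely in software on the PC; years ago they already achieved what I called "almost" impossible earlier. They designed a PCIe RCB (radio control board, an FPGA implementing a low-latency PCIe interface) card connected to an RF board, which shows that PCIe latency is in fact low enough. The level of the project is truly extraordinary and has not been surpassed to this day. It involves a large number of optimization techniques for the PC architecture (lookup tables instead of computation, caching instead of computation, etc.), as well as isolating several cores under Windows for dedicated hard real-time processing. The RCB board plus the RF board costs about 2.5k euros. Many universities have also built 4G/5G implementations on this architecture. When the project founder Kun Tan left for Huawei, SORA was open-sourced on GitHub. Development on GitHub does not seem active, though, and it is basically no longer updated. Later, two researchers at Microsoft designed a DSL (Domain Specific Language) and rewrote the Wi-Fi implementation of the SORA architecture in this new language. But even with the DSL, meeting the strictest SIFS delay still requires the PCIe-based RCB card; RF front ends with other interfaces (for example the USB-based BladeRF and USRP) cannot meet the SIFS feedback delay. Judging from public information, it does not use the Linux mac80211 subsystem either. (Could a Windows implementation use a subsystem from the Linux kernel?)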

- FPGA implementation (tick) by Haoyang Wu of Peking University at mobicom 2017

http://metro.cs.ucla.edu/papers/mobicom17-tick.pdf

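At mobicom 2017 in Salt Lake City, USA, Haoyang Wu of Peking University presented The Tick Programmable Low-Latency SDR System. As I recall, it also won a community contribution award, because it was the first time anyone in the community had implemented full-stack Wi-Fi on an FPGA. The work is seriously hard-core, and its architecture is very close to ours. The main difference is that they built a USB 3.0 interface to connect the FPGA to a PC and then used the mac80211 subsystem of Linux on the PC, which means their driver is based on the USB 3.0 interface. Our openwifi, in contrast, has the "full stack" on a single chip (SoC): Linux runs on the on-chip ARM processor and is connected to the on-chip FPGA through Xilinx AXI DMA channels. Because a PC interface such as USB is eliminated, end-to-end latency is lower. If you test openwifi with ping and compare it with other USB/PCIe-based Wi-Fi adapters, you will find that the on-chip full stack has lower latency. In addition, the authors seemed to mention at mobicom that it would be open-sourced in the future, but two years later there is still no news.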

- gnuradio/USRP/RFNoC community

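This is currently the largest and most active SDR community. For Wi-Fi, however, it mostly offers small module implementations or quick prototypes written for papers, with no relatively complete module-level or system-level implementation. In the early days of RFNoC (RF Network on Chip), the FPGA development framework for USRP, a presentation titled Building an OFDM receiver with RFNoC mentioned implementing a Wi-Fi receiver in the USRP's FPGA based on the RFNoC framework, but a relatively complete RFNoC Wi-Fi receiver module never seems to have been released. The entry barrier of RFNoC development is also somewhat high. Developing for an FPGA with the vendor's own toolchain already has a certain barrier; RFNoC adds another layer on top of the basic FPGA architecture, involving the communication and control interfaces with gnuradio companion, and requires a fairly deep understanding of the concepts of the whole USRP and gnuradio ecosystem. As a result, not many IP cores in the RFNoC framework are contributed by developers, and my guess is that RFNoC is mainly used inside Ettus for developing the FPGA on USRPs.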

- gr-ieee802-11 project

https://github.com/bastibl/gr-ieee802-11

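This is Bastian Bloessl's 802.11 implementation based on gnuradio. It is a relatively complete and fairly widely used SDR Wi-Fi implementation. Because it uses gnuradio on a PC, it cannot achieve the true SIFS feedback delay and therefore cannot fully communicate with commercial Wi-Fi chips. The author is well aware of this and offers some workarounds, such as disabling the ACK mechanism. For people who are not familiar with FPGAs and want to start from gnuradio, this project is a good choice that is quick to pick up and can be further developed as needed. A PC plus an inexpensive SDR front end (HackRF, BladeRF, USRP B series or N series, etc.) is all it takes to run.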

- openofdm

https://github.com/jhshi/openofdm

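This is a complete FPGA implementation of a Wi-Fi receiver for the USRP N210, open-sourced in the last two years! The author is Jinghao Shi (史经浩), who is Chinese. Two years ago, when I had the idea for the openwifi project, I dutifully developed the Wi-Fi receiver algorithm in Matlab first and then planned to implement it on the FPGA. Later I came across the openofdm project by chance, found its documentation quite solid, and decided to adopt it. openofdm uses a highly optimized design aimed at the small FPGA inside the N210 and therefore pays some price in demodulation performance, but that is sufficient for the first version of openwifi, whose main purpose is to validate full-stack integration. While using it we also found some bugs in openofdm and some missing features needed for full-stack integration, and we made the corresponding improvements. The author of openofdm has since merged openwifi's improvements into openofdm.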

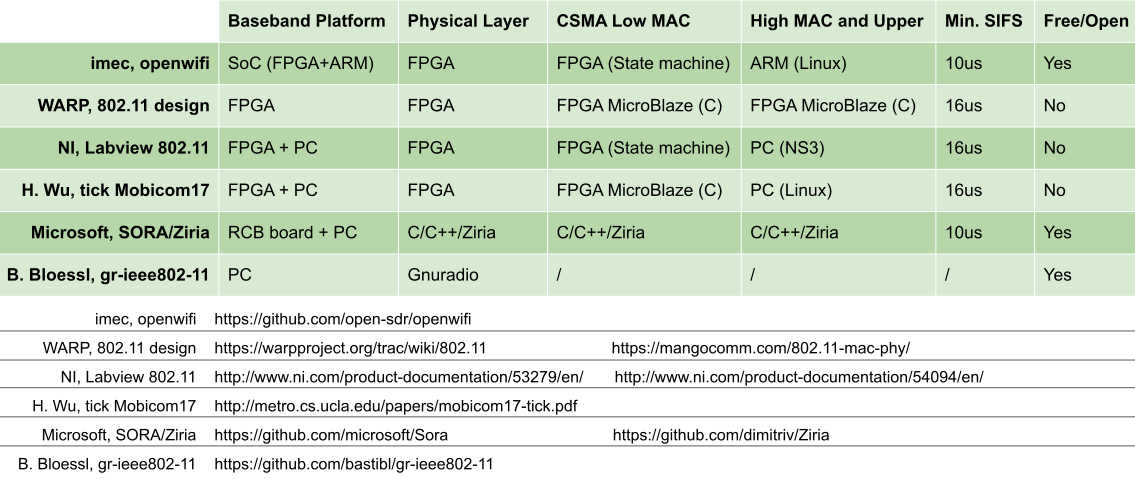

The table here summarizes the similarities and differences between openwifi and the other projects:

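In addition, for the SDR platform (Zynq SoC + AD9361), openwifi uses Analog Devices' HDL reference design (https://github.com/analogdevicesinc/hdl) and its Linux kernel version (https://github.com/analogdevicesinc/linux), as well as some related Xilinx IP cores and the Xilinx AXI DMA Linux driver example, modified as necessary for Wi-Fi. This saves a great deal of work on the interfaces between the FPGA and the RF front end and the ARM. openwifi references and documents the corresponding GitHub resources of Analog Devices and Xilinx.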

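The Linux driver part of openwifi naturally also draws on the source code of various Wi-Fi chip drivers in Linux. Because openwifi uses the Xilinx AXI DMA interface (the on-chip interface between FPGA and ARM) to talk to Linux, while most Wi-Fi chips in the Linux kernel code are PCIe- or USB-based, the drivers could only serve as references and could not be ported directly. After studying the various Wi-Fi chip drivers in the Linux kernel code, I found that the driver for the rtl8180/8187 chips from the Taiwanese company realtek was the simplest. I even tracked down some old adapters based on the rtl8180/8187 chips and learned how a real Wi-Fi adapter works by studying and modifying the rtl8180/8187 driver under Linux. By following these chip drivers and combining them with the DMA driver code provided by Xilinx, I successfully implemented the mac80211-compatible driver for openwifi.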

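To make testing and debugging easier, we also developed a complete set of Matlab baseband transmit and receive algorithms ourselves as the benchmark for the FPGA implementation. Without it, when something goes wrong you do not know what the target is.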

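Looking back at how openwifi came into being, one thing I am proud of is that the project's leadership and main contributions came from Chinese developers.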

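- Myself. For more than two years I have put in almost 120% of my time. The openwifi work is part of the EU H2020 project ORCA (https://www.orca-project.eu/). The main motivation was the hope of leaving the community something truly widely used and shared when the project ends in 2020. I am of course also very grateful to my employer imec for supporting open source.

- My Chinese colleague Liu Wei (刘薇) (https://telefoonboek.ugent.be/nl/people/802000881827), who ported openofdm from the USRP N210 platform to the Zynq platform and worked with me on a large amount of module-level and system-level debugging and testing.

- Jinghao Shi (史经浩), the author of openofdm. According to LinkedIn, he joined Facebook after graduating and seems to have long since stopped working on Wi-Fi. But his open-source openofdm implementation made it possible for us to finish openwifi within two years; otherwise it would have taken at least another six months to a year.

- The Wi-Fi chip drivers of realtek, a company from Taiwan, China, were my main material for learning Wi-Fi drivers. The famous rtl-sdr TV dongle is also built on this company's chips, which truly deserves to be called the conscience of the industry.

- Finally, one developer who is not from China but must also be mentioned: my colleague Michael Mehari from Ethiopia (https://biblio.ugent.be/person/802001220721). He developed the OFDM tx FPGA module on the basis of our initial Matlab simulation code. Because he has never seen or consulted WARP's PHY tx FPGA implementation, our open-source code is "uncontaminated".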

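One last point: at this stage openwifi will certainly be thoroughly outperformed by commercial chips in every respect, no question about it. Nor is it currently meant to compete with commercial chips; its peers are the other projects in the comparison table above. But when Linus Torvalds first released Linux, it too was nothing next to the mighty commercial UNIX systems, and nobody imagined that Linux would later become so powerful. The key to that lies in you, the developers. I am delighted that my tweet announcing the openwifi project quickly received 130,000 impressions and 24,000 plays (of the demo video), with people from all over the world saying "now we have something to play with".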

“Talk is cheap. Show me the code.” – Linus Torvalds

Let's get to work.

]]>